Elon Musk has made it clear that he doesn’t like Facebook. However, Tesla still uses one piece of technology that comes from Zuckerberg’s laboratory. We can bet that it made its way into SpaceX as well and that it was an important part of the recent launch too. This technology has a big advocate in the AI world: Yann LeCun, the father of modern Convolutional Neural Networks. That is correct, we are talking about PyTorch.

This deep learning framework is extremely popular in the research community, and over the past couple of years it has gained popularity in industry as well. In this article, we explore the basic principles of PyTorch and how they can be used for building neural networks.

PyTorch provides a number of ways to create different types of neural networks. In this article, we create two types of neural networks for image classification. The first one is built using only simple feed-forward layers, and the second one is a Convolutional Neural Network. If you want to learn more about machine learning and deep learning in general, check out our Ultimate Guide to Machine Learning with Python.

In this article we cover:

1. Deep Learning Intuition

2. Dataset and Prerequisites

3. Building Feed Forward Neural Network with PyTorch

4. Building Convolutional Neural Networks with PyTorch

1. Deep Learning Intuition

Deep learning and neural networks are big buzzwords of the decade. Neural Networks are based on the elements of the biological nervous system and they try to imitate its behavior. They are composed of small processing units – neurons and weighted connections between them.

The weight of a connection simulates the number of neurotransmitters transferred between neurons. Mathematically, we can define a neural network as an ordered triple (N, C, w), where N is a set of neurons, C is a set {(i, j) | i, j ∈ N} whose elements are connections between neurons i and j, and w(i, j) is the weight of the connection between neurons i and j.

Usually, a neuron receives the outputs of many other neurons as its input. The propagation function combines these inputs, taking the connection weights into account, into the so-called network input of that neuron. Often, this propagation function is just the weighted sum of the inputs. Mathematically, it is defined like this: net = Σ (i*w), where net is the network input, i is the value of each individual input, and w is the weight of the connection through which that input arrived.

After that, the network input is processed by the activation function. This function defines what will appear on the output of the neuron. Neurons are structured into layers, where every neuron of one layer is connected to all neurons of the neighboring layers.
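The weighted sum and the activation step described above can be sketched in a few lines of plain Python. The input values, the weights, and the helper names `propagate` and `relu` are made up for illustration, and ReLU is just one possible choice of activation function:

```python
# Outputs of three upstream neurons and the weights of the connections
# they arrive through (illustrative values, not taken from the article).
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, 0.1]


def propagate(inputs, weights):
    """Propagation function: the weighted sum of the inputs."""
    return sum(i * w for i, w in zip(inputs, weights))


def relu(net):
    """One possible activation function: max(0, net)."""
    return max(0.0, net)


net = propagate(inputs, weights)  # 0.2 - 0.3 + 0.2, i.e. roughly 0.1
output = relu(net)                # positive network input passes through
print(net, output)
```

A whole layer repeats this computation once per neuron, each neuron with its own set of weights.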

2. Dataset and Prerequisites

In this article, we implement neural networks for image classification of the Fashion MNIST dataset. So let’s learn a little bit about this dataset. Also, let’s learn how to install PyTorch.

2.1 Fashion MNIST Dataset

This dataset is an “alternative version” of the standard MNIST dataset, which is often used as a “Hello world” example. In fact, the Fashion MNIST dataset has the same structure as the MNIST dataset, i.e. it has a training set of 60,000 images and a testing set of 10,000 images of clothes.

There are 10 categories of clothes. The goal of the neural network is to learn these categories and be able to successfully categorize new images. All images in the dataset have been size-normalized and centered. The size of the images is fixed to 28×28, so the image preprocessing is minimal. Here is what that looks like:

2.2 Installing PyTorch

Installing PyTorch is quite easy: the installation page on the official PyTorch website generates the command that you need to run. All you need to do is enter your environment details and run the command. Here is how I’ve set it up for my machine:

To verify that you have installed it correctly, run Jupyter Notebook and try to import torch:

import torch
torch.__version__

'1.9.0'

3. Building Feed Forward Neural Networks with PyTorch

Finally, let’s start with the PyTorch implementation of neural networks. First, we need to import all necessary modules:

import torch
import torchvision
import numpy as np
import matplotlib.pyplot as plt
import torch.nn as nn
import torch.nn.functional as F
from torchvision.datasets import FashionMNIST
from torchvision.transforms import ToTensor
from torchvision.utils import make_grid
from torch.utils.data.dataloader import DataLoader
from torch.utils.data import random_split
%matplotlib inline

You can see that we are pretty much only using PyTorch modules (except for NumPy and Matplotlib). Using the nn module, we are able to create different neural network layers, and using nn.functional we can apply different activation functions. Apart from the PyTorch libraries, we use some modules from the torchvision library. Namely, we use the FashionMNIST class, which gives access to the Fashion MNIST data.

3.1 Loading Data with PyTorch

So, let’s load this data:

data = FashionMNIST(root='data/', download=True, transform=ToTensor())

validation_size = 10000
train_size = len(data) - validation_size

train_data, val_data = random_split(data, [train_size, validation_size])

batch_size = 128

train_loader = DataLoader(train_data, batch_size, shuffle=True, num_workers=4, pin_memory=True)
val_loader = DataLoader(val_data, batch_size*2, num_workers=4, pin_memory=True)

First, we create an object of the FashionMNIST class, which essentially contains all the necessary data. We split this data into training and validation sets. Training data is used during the training process of supervised learning, which is the method neural networks use to learn from the data.

Validation data is also used during the training process, to evaluate how well the neural network performs. Usually, we would create a test set too, for the final evaluation of neural network performance on thus far unseen data, but for this simple tutorial, this is enough. Then we use the DataLoader class to shuffle the training data and separate it into batches that are fed to the neural network during each training step.
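To see what splitting and batching produce without downloading anything, here is a minimal sketch that runs the same two steps on synthetic data. The random tensors, the 800/200 split, and the use of `TensorDataset` are assumptions made only for this illustration; they stand in for the real Fashion MNIST images:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset, random_split

# Synthetic stand-in for Fashion MNIST: 1,000 grayscale 28x28 "images"
# with random integer labels in [0, 10). This is not real data.
images = torch.rand(1000, 1, 28, 28)
labels = torch.randint(0, 10, (1000,))
dataset = TensorDataset(images, labels)

# Hold out 200 samples for validation and keep the rest for training.
train_data, val_data = random_split(dataset, [800, 200])

# Only the training data is shuffled; validation order does not matter.
train_loader = DataLoader(train_data, batch_size=128, shuffle=True)
val_loader = DataLoader(val_data, batch_size=256)

batch_images, batch_labels = next(iter(train_loader))
print(batch_images.shape)  # torch.Size([128, 1, 28, 28])
print(batch_labels.shape)  # torch.Size([128])
print(len(train_loader))   # 7 batches: six of 128 samples and one of 32
```

Each batch is a pair of tensors, which is why the training and validation steps later unpack it as `images, labels = batch`.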

3.2 Feed Forward Neural Network with PyTorch

Ok, to the fun stuff, let’s build a neural network with PyTorch. Here is the complete FFNN class:

class FFNN(nn.Module):
    """Simple Feed Forward Neural Network with n hidden layers"""

    def __init__(self, input_size, num_hidden_layers, hidden_size, out_size, accuracy_function):
        super().__init__()
        self.accuracy_function = accuracy_function

        # Create first hidden layer
        self.input_layer = nn.Linear(input_size, hidden_size)

        # Create remaining hidden layers
        self.hidden_layers = nn.ModuleList()
        for i in range(0, num_hidden_layers):
            self.hidden_layers.append(nn.Linear(hidden_size, hidden_size))

        # Create output layer
        self.output_layer = nn.Linear(hidden_size, out_size)

    def forward(self, input_image):
        # Flatten image
        input_image = input_image.view(input_image.size(0), -1)

        # Utilize hidden layers and apply activation function
        output = self.input_layer(input_image)
        output = F.relu(output)

        for layer in self.hidden_layers:
            output = layer(output)
            output = F.relu(output)

        # Get predictions
        output = self.output_layer(output)
        return output

    def training_step(self, batch):
        # Load batch
        images, labels = batch

        # Generate predictions
        output = self(images)

        # Calculate loss
        loss = F.cross_entropy(output, labels)
        return loss

    def validation_step(self, batch):
        # Load batch
        images, labels = batch

        # Generate predictions
        output = self(images)

        # Calculate loss
        loss = F.cross_entropy(output, labels)

        # Calculate accuracy
        acc = self.accuracy_function(output, labels)

        return {'val_loss': loss, 'val_acc': acc}

    def validation_epoch_end(self, outputs):
        batch_losses = [x['val_loss'] for x in outputs]

        # Combine losses and return mean value
        epoch_loss = torch.stack(batch_losses).mean()

        # Combine accuracies and return mean value
        batch_accs = [x['val_acc'] for x in outputs]
        epoch_acc = torch.stack(batch_accs).mean()

        return {'val_loss': epoch_loss.item(), 'val_acc': epoch_acc.item()}

    def epoch_end(self, epoch, result):
        print("Epoch: {} - Validation Loss: {:.4f}, Validation Accuracy: {:.4f}".format(
            epoch, result['val_loss'], result['val_acc']))

Essentially, when you want to build a model using PyTorch, you can inherit from the nn.Module class. This way, you can create different types of neural networks just by overriding several methods. This is one of the main reasons why PyTorch is so popular in the research community: it gives you “precooked” solutions with enough flexibility.

We utilize that to create a model that receives several parameters through the constructor. It receives the input size (i.e. the number of neurons in the input layer), the number of hidden layers and their size, the output size (i.e. the number of neurons in the output layer, which equals the number of categories), and the accuracy function that is used during validation.

In order for PyTorch to know that the model has certain layers, you need to assign each layer to an attribute of the model. That is why we create the self.input_layer and self.output_layer attributes.

Note that for hidden layers we use a different approach. We create a list of layers with nn.ModuleList(), since their number is configurable through the num_hidden_layers parameter. For every layer we use nn.Linear, which creates a simple layer with a defined number of neurons. Layers of this type compute the simple function y = wx + b.
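Both points can be checked directly. The sketch below first reproduces the output of an nn.Linear layer by hand, and then compares nn.ModuleList with a plain Python list. The two small classes `WithModuleList` and `WithPlainList` are hypothetical and exist only for this comparison:

```python
import torch
import torch.nn as nn

layer = nn.Linear(in_features=4, out_features=3)
x = torch.rand(2, 4)  # a batch of 2 samples with 4 features each

# nn.Linear stores its weight with shape (out_features, in_features),
# so the layer computes x @ W^T + b.
manual = x @ layer.weight.T + layer.bias
print(torch.allclose(layer(x), manual))  # True


class WithModuleList(nn.Module):
    def __init__(self):
        super().__init__()
        # Layers inside nn.ModuleList are registered with the module.
        self.layers = nn.ModuleList([nn.Linear(4, 4) for _ in range(3)])


class WithPlainList(nn.Module):
    def __init__(self):
        super().__init__()
        # Layers inside a plain list are invisible to PyTorch.
        self.layers = [nn.Linear(4, 4) for _ in range(3)]


print(len(list(WithModuleList().parameters())))  # 6: three weights, three biases
print(len(list(WithPlainList().parameters())))   # 0
```

With a plain list, the optimizer would receive no parameters from those layers and they would never be trained, which is why the constructor uses nn.ModuleList.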

Apart from this, we need to override one important nn.Module method – forward. This function defines how the input will be processed in our neural network. It basically connects all the layers we defined in the constructor. Let’s examine it in more detail:

def forward(self, input_image):
    # Flatten image
    input_image = input_image.view(input_image.size(0), -1)

    # Utilize hidden layers and apply activation function
    output = self.input_layer(input_image)
    output = F.relu(output)

    for layer in self.hidden_layers:
        output = layer(output)
        output = F.relu(output)

    # Get predictions
    output = self.output_layer(output)
    return output

3.3 Training Feed Forward Neural Network with PyTorch

First, we flatten the image, meaning we reshape each 28×28 image into a one-dimensional array of 784 values. We do this because the input layer of our neural network cannot receive 2D inputs. Then we pass this information through each linear layer and apply the rectifier, or ReLU, activation function.

This activation function is the one most commonly used for hidden layers, since it tends to give good results in practice. It is defined by the formula relu(x) = max(0, x). Note that we don’t use ReLU after the output layer. This is because the output layer should produce an unconstrained score for each category. F.cross_entropy, which we use as the loss, applies softmax internally to turn these scores into probabilities.
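Here is a small sketch of the flattening and the ReLU step in isolation. The random batch and the four sample values are illustrative only:

```python
import torch
import torch.nn.functional as F

# A batch of 128 single-channel 28x28 images (random values for illustration).
batch = torch.rand(128, 1, 28, 28)

# Keep the batch dimension and collapse everything else into one axis.
flat = batch.view(batch.size(0), -1)
print(flat.shape)  # torch.Size([128, 784])

# ReLU replaces negative values with zero and leaves the rest untouched.
x = torch.tensor([-2.0, -0.5, 0.0, 1.5])
activated = F.relu(x)
print(activated)  # tensor([0.0000, 0.0000, 0.0000, 1.5000])
```

The -1 passed to view tells PyTorch to infer that dimension, so the same line works for any image size.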

Apart from the forward function, there are various other methods we implement in order to better control the training of the network. Methods training_step and validation_step define what is done during every training and validation pass:

def training_step(self, batch):
    # Load batch
    images, labels = batch

    # Generate predictions
    output = self(images)

    # Calculate loss
    loss = F.cross_entropy(output, labels)
    return loss

def validation_step(self, batch):
    # Load batch
    images, labels = batch

    # Generate predictions
    output = self(images)

    # Calculate loss
    loss = F.cross_entropy(output, labels)

    # Calculate accuracy
    acc = self.accuracy_function(output, labels)

    return {'val_loss': loss, 'val_acc': acc}

The training_step function takes the batch of images provided by the DataLoader and pushes them through the network to get the predictions. Underneath, PyTorch uses the forward function for this. Once this is done, we measure how well the neural network performed by calculating the loss.

Different functions can be used to measure the difference between predicted data and real data. In this example, we use cross-entropy. The validation_step method looks similar, but it also calculates the accuracy of our predictions using the accuracy_function we passed through the constructor, and returns a dictionary with both the loss and the accuracy.
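To make the loss calculation more concrete, the following sketch computes the cross-entropy of two made-up predictions and then reproduces the same number by hand. The scores and labels are invented for the example. The point is that F.cross_entropy expects raw scores (logits) and applies log-softmax itself:

```python
import torch
import torch.nn.functional as F

# Raw network outputs for 2 samples and 3 classes (made-up values).
logits = torch.tensor([[2.0, 0.5, -1.0],
                       [0.1, 0.2, 3.0]])
labels = torch.tensor([0, 2])  # the correct class of each sample

loss = F.cross_entropy(logits, labels)

# The same value by hand: take log-softmax, pick the entry that belongs
# to the correct class, negate it, and average over the batch.
log_probs = F.log_softmax(logits, dim=1)
manual = -log_probs[torch.arange(len(labels)), labels].mean()

print(torch.allclose(loss, manual))  # True
```

The loss gets smaller as the score of the correct class grows relative to the others, which is what training pushes the network towards.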

Once the validation epoch ends, we combine the results of all batches into mean values for the epoch, so we can follow the history of the training process. Also, at the end of every epoch, we print out this information. These last two functionalities are implemented within the validation_epoch_end and epoch_end methods:

def validation_epoch_end(self, outputs):
    batch_losses = [x['val_loss'] for x in outputs]

    # Combine losses and return mean value
    epoch_loss = torch.stack(batch_losses).mean()

    # Combine accuracies and return mean value
    batch_accs = [x['val_acc'] for x in outputs]
    epoch_acc = torch.stack(batch_accs).mean()

    return {'val_loss': epoch_loss.item(), 'val_acc': epoch_acc.item()}

def epoch_end(self, epoch, result):
    print("Epoch: {} - Validation Loss: {:.4f}, Validation Accuracy: {:.4f}".format(
        epoch, result['val_loss'], result['val_acc']))

In order to automate the training process of the neural networks, we implement one more class ModelTrainer:

class ModelTrainer():
    def fit(self, epochs, learning_rate, model, train_loader, val_loader, opt_func=torch.optim.SGD):
        history = []
        optimizer = opt_func(model.parameters(), learning_rate)

        for epoch in range(epochs):
            # Training
            for batch in train_loader:
                loss = model.training_step(batch)
                loss.backward()
                optimizer.step()
                optimizer.zero_grad()

            # Validation
            result = self._evaluate(model, val_loader)
            model.epoch_end(epoch, result)
            history.append(result)

        return history

    def _evaluate(self, model, val_loader):
        outputs = [model.validation_step(batch) for batch in val_loader]
        return model.validation_epoch_end(outputs)

This class has two methods: fit and _evaluate. The fit method is used for training. It receives a model, the number of epochs (the number of times the whole dataset will be passed through the network), the learning rate, and the data loaders. For each epoch, we get batches from the loader and run them through the network by calling the training_step method. Then we get the loss and use the backward method to calculate gradients. Finally, we use the optimizer to update the weights of the network and reset the gradients before the next batch. The _evaluate method runs validation_step on every validation batch and combines the results.
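The three calls inside the training loop (backward, step, zero_grad) can be watched in isolation on a toy problem. The sketch below is not part of the article's pipeline. The synthetic data, the single nn.Linear model, the mean squared error loss, and the learning rate of 0.1 are all assumptions chosen to keep the example tiny:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Toy regression problem: learn y = 2x from 64 synthetic points.
x = torch.rand(64, 1)
y = 2 * x

model = nn.Linear(1, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

initial_loss = F.mse_loss(model(x), y).item()

for _ in range(200):
    loss = F.mse_loss(model(x), y)  # forward pass and loss
    loss.backward()                 # compute gradients of the loss
    optimizer.step()                # update weights using the gradients
    optimizer.zero_grad()           # clear gradients before the next pass

final_loss = F.mse_loss(model(x), y).item()
print(final_loss < initial_loss)
```

The call to zero_grad matters because PyTorch accumulates gradients across backward calls. Without it, every step would use the sum of all gradients computed so far.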

Alright, those are the classes that describe a general neural network and a general training process. We need to get more specific and utilize these classes for our problem. To do so, we first need to implement an accuracy function:

def accuracy(outputs, labels):
    # The predicted class is the index of the largest output score
    _, preds = torch.max(outputs, dim=1)
    return torch.tensor(torch.sum(preds == labels).item() / len(preds))

Also, we define a helper function for plotting the history:

def plot_history(history):
    losses = [x['val_loss'] for x in history]
    plt.plot(losses, '-x')
    plt.xlabel('Epoch')
    plt.ylabel('Loss')

    accuracies = [x['val_acc'] for x in history]
    plt.plot(accuracies, '-x')
    plt.xlabel('epoch')
    plt.ylabel('accuracy')
    plt.title('Loss and Accuracy');

3.4 Running it all Together

Finally, we can put all these pieces together and create an object of the FFNN class. We create a neural network with num_hidden_layers set to 3 and with 32 neurons in each hidden layer. Note that the input size is 28×28=784 and the output size is 10 since we have 10 categories of clothes:

input_size = 784
num_classes = 10
num_hidden_layers = 3  # matches the three ModuleList layers in the printout

model = FFNN(input_size, num_hidden_layers, 32, out_size=num_classes, accuracy_function=accuracy)
print(model)

FFNN(
  (input_layer): Linear(in_features=784, out_features=32, bias=True)
  (hidden_layers): ModuleList(
    (0): Linear(in_features=32, out_features=32, bias=True)
    (1): Linear(in_features=32, out_features=32, bias=True)
    (2): Linear(in_features=32, out_features=32, bias=True)
  )
  (output_layer): Linear(in_features=32, out_features=10, bias=True)
)

Let’s train it and plot the loss and accuracy history:

model_trainer = ModelTrainer()

training_history = []
training_history += model_trainer.fit(5, 0.2, model, train_loader, val_loader)

plot_history(training_history)

Epoch: 0 - Validation Loss: 0.7095, Validation Accuracy: 0.7266
Epoch: 1 - Validation Loss: 0.5858, Validation Accuracy: 0.7959
Epoch: 2 - Validation Loss: 0.5417, Validation Accuracy: 0.7789
Epoch: 3 - Validation Loss: 0.4696, Validation Accuracy: 0.8247
Epoch: 4 - Validation Loss: 0.4303, Validation Accuracy: 0.8371

Notice how the loss is getting lower and the accuracy is, apart from a small dip in the third epoch, getting better. In the end, after only 5 epochs, we reached an accuracy of roughly 84% (0.8371).

4. Building Convolutional Neural Networks with PyTorch

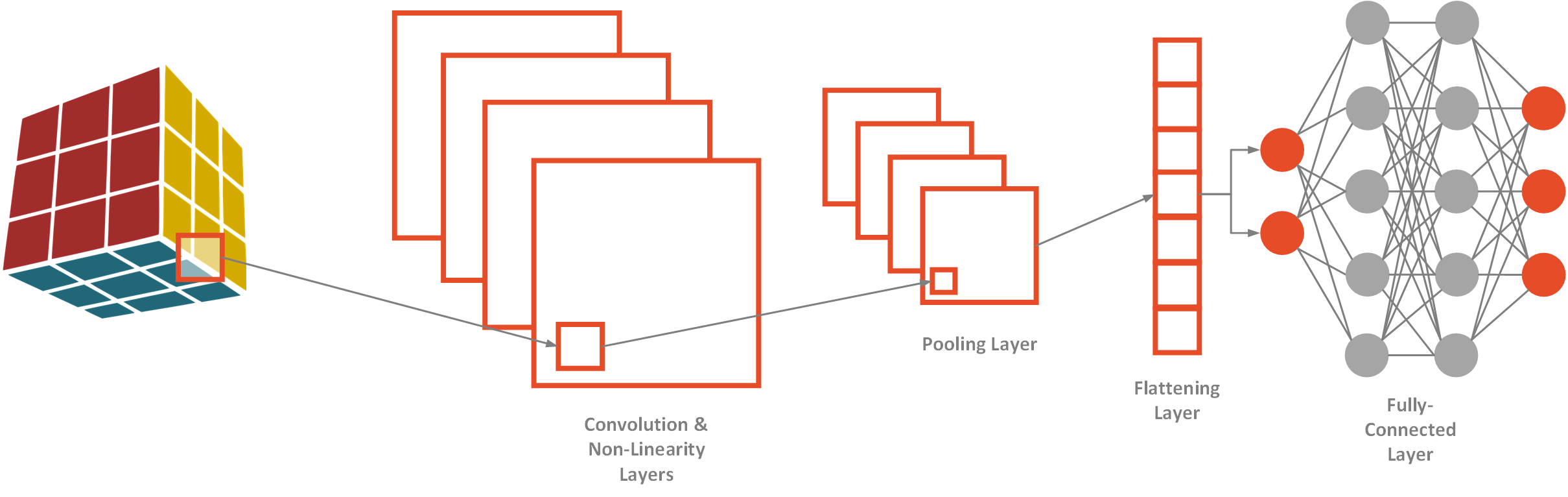

If you want to process and classify images, one of the best ways to do so is by using Convolutional Neural Networks. This type of network is, in a way, responsible for the deep learning hype of the past couple of years. In the end, they use feed-forward neural networks, but they have a couple of tricks for image processing.

At their core, we can find the convolution process. This process is used for detecting features of the images, and this information is then used for classification. Here is what the complete architecture of Convolutional Neural Networks looks like:

First, convolution layers detect features (lines, curves, etc.) of the image using filters. They create so-called feature maps that contain information about where in the image a certain feature is located. These maps are further compressed by the pooling layers, after which they are flattened into a 1D array. Finally, a feed-forward network, which in this context is called fully connected, is used for classification.
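The feature detection, pooling, and flattening steps can be illustrated on a tiny example. In the sketch below, the 6×6 image and the edge-detecting filter are written by hand purely for illustration. In a real Convolutional Neural Network the filter values are learned during training:

```python
import torch
import torch.nn.functional as F

# One 6x6 single-channel image: left half dark (0), right half bright (1).
image = torch.zeros(1, 1, 6, 6)
image[..., 3:] = 1.0

# Hand-made 3x3 filter that responds to dark-to-bright vertical edges.
kernel = torch.tensor([[-1.0, 0.0, 1.0],
                       [-1.0, 0.0, 1.0],
                       [-1.0, 0.0, 1.0]]).view(1, 1, 3, 3)

# Convolution produces a feature map showing where the edge is located.
feature_map = F.conv2d(image, kernel)
print(feature_map.shape)     # torch.Size([1, 1, 4, 4])
print(feature_map[0, 0, 0])  # tensor([0., 3., 3., 0.])

# Max pooling compresses the map, and flattening turns it into a 1D array.
pooled = F.max_pool2d(feature_map, 2)
flat = pooled.view(pooled.size(0), -1)
print(flat.shape)            # torch.Size([1, 4])
```

The feature map is non-zero only in the columns where the filter window straddles the edge, which is the sense in which it records where the feature is located.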

The PyTorch nn module provides a number of other layer types, apart from the Linear layer that we already used. Here is how we can implement the process described above:

class CNN(nn.Module):
    """Simple Convolutional Neural Network"""

    def __init__(self, accuracy_function):
        super().__init__()
        self.accuracy_function = accuracy_function

        # Create Convolutional Layers
        self.conv1 = nn.Conv2d(1, 32, 3, 1)
        self.conv2 = nn.Conv2d(32, 64, 3, 1)

        # Create Fully Connected Layers
        self.fc1 = nn.Linear(1600, 128)
        self.fc2 = nn.Linear(128, 10)

    def forward(self, input_image):
        # Convolution, ReLU and MaxPooling
        output = self.conv1(input_image)
        output = F.relu(output)
        output = F.max_pool2d(output, (2, 2))

        output = self.conv2(output)
        output = F.relu(output)
        output = F.max_pool2d(output, 2)

        # Flatten
        output = output.view(-1, self.num_flat_features(output))

        # Fully Connected
        output = self.fc1(output)
        output = F.relu(output)
        output = self.fc2(output)

        return output

    def training_step(self, batch):
        # Load batch
        images, labels = batch

        # Generate predictions
        output = self(images)

        # Calculate loss
        loss = F.cross_entropy(output, labels)
        return loss

    def validation_step(self, batch):
        # Load batch
        images, labels = batch

        # Generate predictions
        output = self(images)

        # Calculate loss
        loss = F.cross_entropy(output, labels)

        # Calculate accuracy
        acc = self.accuracy_function(output, labels)

        return {'val_loss': loss, 'val_acc': acc}

    def validation_epoch_end(self, outputs):
        batch_losses = [x['val_loss'] for x in outputs]

        # Combine losses and return mean value
        epoch_loss = torch.stack(batch_losses).mean()

        # Combine accuracies and return mean value
        batch_accs = [x['val_acc'] for x in outputs]
        epoch_acc = torch.stack(batch_accs).mean()

        return {'val_loss': epoch_loss.item(), 'val_acc': epoch_acc.item()}

    def epoch_end(self, epoch, result):
        print("Epoch: {} - Validation Loss: {:.4f}, Validation Accuracy: {:.4f}".format(
            epoch, result['val_loss'], result['val_acc']))

    def num_flat_features(self, image):
        # Number of values per sample: product of all dimensions except the batch one
        size = image.size()[1:]
        num_features = 1
        for s in size:
            num_features *= s
        return num_features

The differences from the FFNN are located in the constructor and the forward method. We know upfront which layers we want to use, so we add two convolutional layers using the Conv2d class and two fully connected layers using the Linear class, like before.
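The value 1600 passed to the first fully connected layer is not arbitrary. It is the number of values left per image after both convolution and pooling stages. A minimal sketch that traces the shapes with the same layer settings, using a random tensor in place of a real image:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

conv1 = nn.Conv2d(1, 32, 3, 1)   # same arguments as self.conv1
conv2 = nn.Conv2d(32, 64, 3, 1)  # same arguments as self.conv2

x = torch.rand(1, 1, 28, 28)     # one random 28x28 "image"

# A 3x3 convolution without padding shrinks 28x28 to 26x26,
# and 2x2 max pooling halves that to 13x13.
x = F.max_pool2d(F.relu(conv1(x)), 2)
print(x.shape)  # torch.Size([1, 32, 13, 13])

# The second stage shrinks 13x13 to 11x11, and pooling rounds down to 5x5.
x = F.max_pool2d(F.relu(conv2(x)), 2)
print(x.shape)  # torch.Size([1, 64, 5, 5])

flat_features = x[0].numel()
print(flat_features)  # 1600, i.e. 64 * 5 * 5
```

If you change the kernel size, add padding, or add another convolutional layer, this number changes and the input size of the first Linear layer has to be updated to match.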

In the forward function we use the max_pool2d function to perform max pooling. The other methods are the same as in the FFNN implementation. We can utilize the ModelTrainer that we already implemented before and train this network:

input_size = 784
num_classes = 10

model = CNN(accuracy_function=accuracy)
print(model)

CNN(
  (conv1): Conv2d(1, 32, kernel_size=(3, 3), stride=(1, 1))
  (conv2): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1))
  (fc1): Linear(in_features=1600, out_features=128, bias=True)
  (fc2): Linear(in_features=128, out_features=10, bias=True)
)

model_trainer = ModelTrainer()

training_history = []
training_history += model_trainer.fit(5, 0.2, model, train_loader, val_loader)

plot_history(training_history)

Epoch: 0 - Validation Loss: 0.5739, Validation Accuracy: 0.7846
Epoch: 1 - Validation Loss: 0.3864, Validation Accuracy: 0.8598
Epoch: 2 - Validation Loss: 0.4431, Validation Accuracy: 0.8344
Epoch: 3 - Validation Loss: 0.3124, Validation Accuracy: 0.8859
Epoch: 4 - Validation Loss: 0.3125, Validation Accuracy: 0.8873

We got somewhat better results than with the feed-forward neural network: the accuracy is now above 88% (0.8873). We can further improve these results by adding more convolutional layers, training the network longer, and modifying the learning rate. Give it a try.

Conclusion

In this article, we presented two implementations of neural networks for image classification using PyTorch. We explored some basic neural network concepts and learned about Convolutional Neural Networks.

Thank you for reading!

Nikola M. Zivkovic

CAIO at Rubik's Code

Nikola M. Zivkovic is the CAIO at Rubik’s Code and the author of the book “Deep Learning for Programmers”. He loves knowledge sharing, and he is an experienced speaker. You can find him speaking at meetups, conferences and as a guest lecturer at the University of Novi Sad.

Rubik’s Code is a boutique data science and software service company with more than 10 years of experience in Machine Learning, Artificial Intelligence & Software development. Check out the services we provide.
