It is always fun and educational to read deep learning scientific papers, especially when they cover the area of the project you are currently working on. However, these papers often contain architectures and solutions that are hard to train, especially if you want to try out, let’s say, some of the winners of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). I can remember reading about VGG16 and thinking “That is all cool, but my GPU is going to die”. However, we can overcome that and use the most prestigious architectures thanks to the concept of transfer learning. In this article, we explore transfer learning with famous Convolutional Neural Networks and how it can be achieved with ML.NET.

1. Dataset and Prerequisites

In this article, we use the “Cats vs Dogs” dataset. This dataset contains 23,262 images of cats and dogs. It is available at Kaggle.

When we unzip the archive, we find two folders: train1 and test. The train1 folder contains the training images that we use in this tutorial. Notice that image names start with cat or dog. These prefixes are essentially our labels, which means that the target is defined using these names.

The implementations provided here are done in C#, and we use the latest .NET 5. So make sure that you have installed this SDK. If you are using Visual Studio this comes with version 16.8.3. Also, make sure that you have installed the following packages:

$ dotnet add package Microsoft.ML

$ dotnet add package Microsoft.ML.ImageAnalytics

$ dotnet add package Microsoft.ML.Vision

$ dotnet add package SciSharp.TensorFlow.Redist -v 2.3.1

You can do the same from the Package Manager Console:

Install-Package Microsoft.ML

Install-Package Microsoft.ML.ImageAnalytics

Install-Package Microsoft.ML.Vision

Install-Package SciSharp.TensorFlow.Redist -Version 2.3.1

IMPORTANT NOTE: Unfortunately, we couldn’t make this solution work with the latest SciSharp.TensorFlow.Redist version (at the time of writing, that is version 2.4.1). In order for this tutorial to work, make sure you are using version 2.3.1 of this NuGet package.

You can do a similar thing using Visual Studio’s Manage NuGet Packages option:

If you need to catch up with the basics of machine learning with ML.NET check out this article.

2. Transfer Learning Intuition

Historically, image classification is the problem that popularized deep neural networks, especially the visual type of neural networks: Convolutional Neural Networks (CNNs). We will not go into details about what CNNs are and how they work. However, we can say that CNNs were popularized after they broke a record in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) back in 2012. This competition evaluates algorithms for object detection and image classification at a large scale. The dataset that they provide contains 1000 image categories and over 1.2 million images. The goal of the image classification algorithm is to correctly predict the class to which the object belongs. Since 2012, every winner of this competition has used CNNs.

Training deep neural networks can be computationally expensive and time-consuming. To get really good results, you need a lot of computing power, which means a lot of GPUs, and this means… well, a lot of money. You could of course train these big architectures and get SOTA results on cloud environments, but this is also quite expensive. For a while, these architectures were not available to regular developers. However, the concept of transfer learning changed that, especially for the problem we are solving today: image classification. Today we can use state-of-the-art architectures that won the ImageNet competition thanks to transfer learning and pre-trained models.

At this moment one might wonder “What are pre-trained models?”. Essentially, a pre-trained model is a saved network that was previously trained on a large dataset, for example on the ImageNet dataset. There are two ways in which you can use one. You can use it as an out-of-the-box solution, or you can use it with transfer learning. Since large datasets are usually used for some general solution, you can customize a pre-trained model and specialize it for a certain problem. This way you can utilize some of the most famous neural networks without losing too much time and resources on training. Additionally, you can fine-tune these models by modifying the behavior of chosen layers. The whole idea revolves around using the lower layers of a pre-trained CNN model and adding additional layers that customize the architecture for the specific problem.

Essentially, serious image classification solutions are usually composed of two parts: the backbone and the head. The backbone is usually a deep architecture that was pre-trained on the ImageNet dataset, without its top layers. The head is the part of the image classification model that predicts the custom classes. These layers are added on top of the pre-trained model. With these systems, we have two phases: the bottleneck phase and the training phase. During the bottleneck phase, images of the specific dataset are run through the backbone architecture, and the results are stored. During the training phase, the stored output from the backbone is used to train the custom layers.
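The two phases can be illustrated with a toy example. The following is only a sketch under loud assumptions: the `Backbone` function is a hypothetical stand-in for a frozen pre-trained network (it just computes two hand-made features), the head is a plain logistic regression, and the four "images" are made-up arrays. It is not how ML.NET implements these phases internally; it only shows that the backbone output is computed once and cached, and that training touches only the head.

```csharp
using System;
using System.Linq;

public static class TwoPhaseSketch
{
    // Hypothetical stand-in for a frozen, pre-trained backbone: it turns a
    // raw input into a small feature vector and is never updated.
    public static double[] Backbone(double[] pixels) =>
        new[] { pixels.Average(), pixels.Max() - pixels.Min() };

    private static double Sigmoid(double z) => 1.0 / (1.0 + Math.Exp(-z));

    // Head: logistic regression over cached features; the last weight is the bias.
    public static double Predict(double[] weights, double[] features)
    {
        double z = weights[weights.Length - 1];
        for (int j = 0; j < features.Length; j++)
            z += weights[j] * features[j];
        return Sigmoid(z);
    }

    // Training phase: only the head's weights change (stochastic gradient descent).
    public static double[] TrainHead(double[][] cached, int[] labels,
                                     int epochs = 2000, double learningRate = 0.5)
    {
        var weights = new double[cached[0].Length + 1];
        for (int epoch = 0; epoch < epochs; epoch++)
        {
            for (int i = 0; i < cached.Length; i++)
            {
                double error = Predict(weights, cached[i]) - labels[i];
                for (int j = 0; j < cached[i].Length; j++)
                    weights[j] -= learningRate * error * cached[i][j];
                weights[weights.Length - 1] -= learningRate * error;
            }
        }
        return weights;
    }

    public static void Main()
    {
        // Made-up data: dark "images" are class 0, bright ones are class 1.
        double[][] images =
        {
            new[] { 0.1, 0.2, 0.1 }, new[] { 0.2, 0.1, 0.3 },
            new[] { 0.9, 0.8, 0.9 }, new[] { 0.8, 0.9, 0.7 }
        };
        int[] labels = { 0, 0, 1, 1 };

        // Bottleneck phase: run every image through the backbone once and cache the result.
        double[][] cached = images.Select(Backbone).ToArray();

        // Training phase: fit the head on the cached features only.
        double[] head = TrainHead(cached, labels);

        double score = Predict(head, Backbone(new[] { 0.85, 0.9, 0.8 }));
        Console.WriteLine($"Probability of class 1: {score:F3}");
    }
}
```

Because the backbone never changes, its output for each image is the same in every epoch, which is why caching it makes the training phase cheap.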

3. Architectures

Over the years, several architectures that won the ImageNet competition became quite popular. Some of them are VGG16, GoogLeNet (Inception) and ResNet. However, these are not the only architectures that are popular for transfer learning. For example, MobileNet is also often used, because it is easy to train. Let’s dig a little deeper and learn more about each of these architectures.

VGG16 is the first architecture we consider. It is a large convolutional neural network proposed by K. Simonyan and A. Zisserman in the paper “Very Deep Convolutional Networks for Large-Scale Image Recognition”. This network achieves 92.7% top-5 test accuracy on the ImageNet dataset. However, it was trained for weeks. Here is a high-level overview of the model:

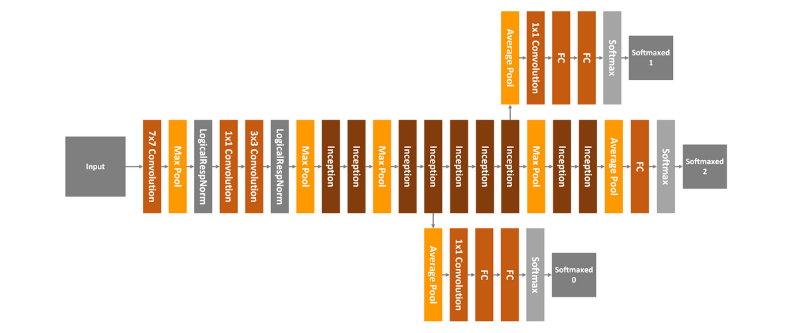

GoogLeNet is also called Inception. This is because it utilizes two concepts: 1×1 Convolution and the Inception Module. The first concept, 1×1 convolution, is used as a dimension reduction module. By reducing the number of dimensions, the number of computations also goes down, which means that the depth and width of the network can be increased. Instead of using a fixed size for each convolution layer, GoogLeNet uses the Inception Module:

As you can see, the 1×1 convolution layer, 3×3 convolution layer, 5×5 convolution layer, and 3×3 max pooling layer perform their operations in parallel, and then their results are stacked together at the output. GoogLeNet has 22 layers in total, and it looks something like this:
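To make the dimension-reduction argument concrete, here is a small worked calculation. It is a sketch with illustrative sizes that are assumptions, not values taken from this article: a 28×28 feature map with 192 input channels, 32 output filters of size 5×5, and a 1×1 layer that first reduces the input to 16 channels. The `ConvolutionCost` class is a hypothetical helper that only counts multiplications.

```csharp
using System;

public static class ConvolutionCost
{
    // Multiplications needed by one convolution layer with "same" padding:
    // every output position of every filter multiplies a kernel x kernel
    // window across all input channels.
    public static long Multiplications(int height, int width,
                                       int inChannels, int outChannels, int kernel)
    {
        return (long)height * width * outChannels * kernel * kernel * inChannels;
    }

    public static void Main()
    {
        // 5x5 convolution applied directly to all 192 input channels.
        long direct = Multiplications(28, 28, 192, 32, 5);

        // 1x1 convolution down to 16 channels, followed by the 5x5 convolution.
        long reduce = Multiplications(28, 28, 192, 16, 1);
        long reduced5x5 = Multiplications(28, 28, 16, 32, 5);

        Console.WriteLine("Direct 5x5:      " + direct);
        Console.WriteLine("1x1 then 5x5:    " + (reduce + reduced5x5));
    }
}
```

With these assumed sizes the direct 5×5 layer needs 120,422,400 multiplications, while the 1×1 reduction followed by the 5×5 layer needs 2,408,448 + 10,035,200 = 12,443,648, which is roughly a tenfold saving.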

Residual Networks, or ResNet, are the final ImageNet-winning architecture we cover in this article. The problem with the previous architectures is that they are very deep. They have a lot of layers, and because of that they are hard to train (the vanishing gradient problem). ResNet addresses that problem with the so-called “identity shortcut connection”, or residual blocks:

In essence, ResNet follows VGG’s 3×3 convolutional layer design, where each convolutional layer is followed by a batch normalization layer and a ReLU activation function. The difference, however, is that ResNet adds the block’s input back in just before the final ReLU. In one variation, the input value is first passed through a 1×1 convolution layer.

The core idea is that a deeper network should not produce a higher training error than a shallower one. The authors of ResNet hypothesize that if you add layers that don’t do anything (identity mappings) to a network, the error should stay the same. This means that letting the layers fit a residual is easier than letting them directly fit the complete desired mapping. This is accomplished by residual blocks.
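The shortcut idea can be sketched in a few lines. This is a simplified illustration under stated assumptions: it uses small dense layers on a vector instead of 3×3 convolutions, it leaves out batch normalization, and `ResidualBlockSketch` with its methods is a hypothetical name. The block computes y = ReLU(F(x) + x), so when the layers inside F contribute nothing, the input simply passes through.

```csharp
using System;
using System.Linq;

public static class ResidualBlockSketch
{
    public static double[] Relu(double[] v) =>
        v.Select(x => Math.Max(0.0, x)).ToArray();

    // Dense layer without bias: output[i] = sum over j of weights[i][j] * input[j].
    public static double[] Dense(double[][] weights, double[] input) =>
        weights.Select(row => row.Zip(input, (w, x) => w * x).Sum()).ToArray();

    // y = ReLU(F(x) + x), where F(x) = W2 * ReLU(W1 * x).
    public static double[] Forward(double[][] w1, double[][] w2, double[] x)
    {
        double[] residual = Dense(w2, Relu(Dense(w1, x)));
        double[] sum = residual.Zip(x, (r, xi) => r + xi).ToArray();
        return Relu(sum);
    }

    // Square matrix of zeros: layers that "don't do anything".
    public static double[][] Zeros(int n) =>
        Enumerable.Range(0, n).Select(_ => new double[n]).ToArray();

    public static void Main()
    {
        double[] x = { 1.0, 2.0, 3.0 };

        // With all-zero weights F(x) is zero, so the block returns ReLU(x),
        // which equals x for non-negative inputs.
        double[] y = Forward(Zeros(3), Zeros(3), x);
        Console.WriteLine(string.Join(", ", y));
    }
}
```

In this sketch, a block whose weights are all zero behaves like an identity mapping for non-negative inputs. That is the property the hypothesis relies on: extra blocks can at worst leave the signal unchanged, so they should not increase the training error.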

This is what the complete ResNet architecture looks like:

MobileNet is a family of general purpose computer vision neural networks designed with mobile devices in mind to support classification, detection and more. In this article we use MobileNetV2.

4. ML.NET and TensorFlow

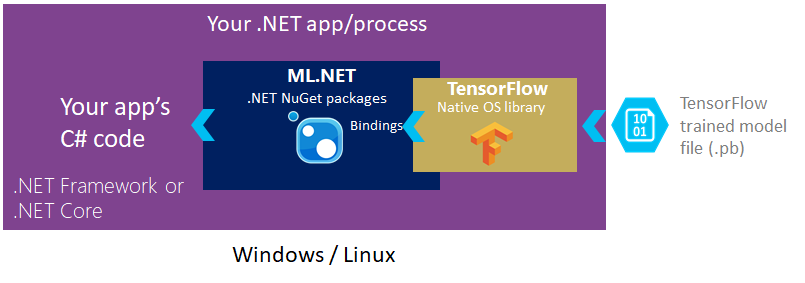

ML.NET doesn’t provide a way to build neural networks, apart from a simple perceptron. Essentially, it is not a tool for that, like TensorFlow and PyTorch are in Python. If you are looking for a way to build a deep neural network from scratch in .NET, you can check out the SciSharp Stack. However, you can use ML.NET in combination with TensorFlow (TensorFlow.NET, to be more precise) to use the pre-trained models that TensorFlow provides.

In a nutshell, we add a reference to the ML.NET NuGet packages in .NET applications and ML.NET includes and references the native TensorFlow library. This gives us the possibility to utilize TensorFlow’s pretrained models.

5. Implementation with ML.NET

5.1 High-Level Architecture

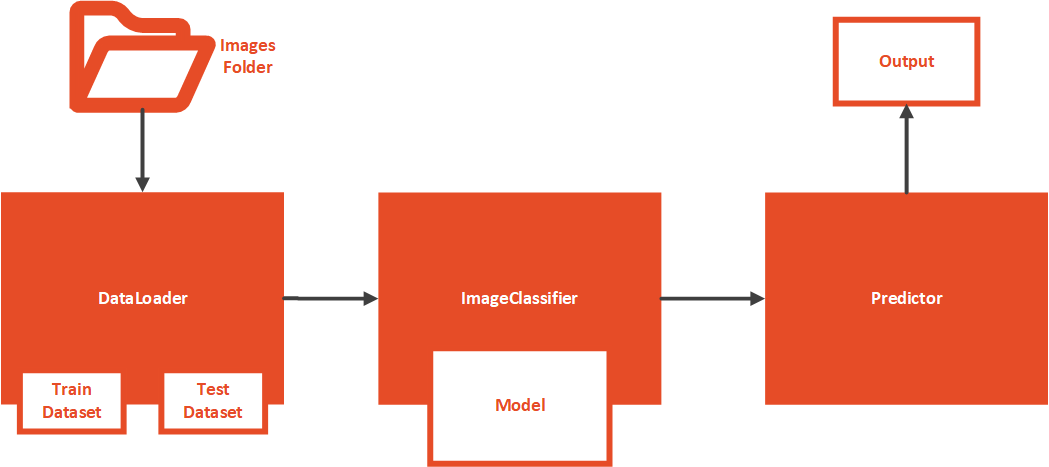

Before we dive deeper into the code, let’s consider the high-level architecture of this implementation. Here we build a solution that allows us to quickly change the architecture that we use for image classification. The idea is to separate the different parts of the application based on the work they do. The folder structure of our solution looks like this:

The DataModel folder contains classes that model the data. The DataLoader class loads images from the folder, pre-processes them and splits them into training, validation and test datasets. The ImageClassifier class wraps the model. It uses the data provided by the DataLoader to train the model. Finally, the Predictor class uses the test dataset and the trained model to make predictions. The architectural overview can be represented like this:

5.2 Data Models

In order to load data from the images and use it with ML.NET algorithms, we need to implement classes that model this data. Three files can be found in the DataModel folder: ImageData, ModelInput and ModelOutput. The ImageData class contains basic information about the image:

namespace ImageClassification.MachineLearning.DataModels

{

public class ImageData

{

public string ImagePath { get; set; }

public string Label { get; set; }

}

}

This class contains image path and the label. The label information is extracted from the filename.
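A minimal sketch of that label extraction follows. It assumes file names follow the `<label>.<number>.<extension>` pattern seen in this dataset (for example cat.10541.jpg); the `LabelSketch` class and the `dog.42.jpg` name are hypothetical and used only for illustration. Taking everything before the first dot also works for labels that are not exactly three characters long.

```csharp
using System;
using System.IO;

public static class LabelSketch
{
    // Returns the part of the file name before the first dot,
    // or the whole file name if it contains no dot.
    public static string LabelFromFileName(string path)
    {
        string fileName = Path.GetFileName(path);
        int firstDot = fileName.IndexOf('.');
        return firstDot < 0 ? fileName : fileName.Substring(0, firstDot);
    }

    public static void Main()
    {
        Console.WriteLine(LabelFromFileName("train/cat.10541.jpg"));
        Console.WriteLine(LabelFromFileName("train/dog.42.jpg"));
    }
}
```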

The ModelInput class models the data that is used as input to the model:

using System;

namespace ImageClassification.MachineLearning.DataModels

{

public class ModelInput

{

public byte[] Image { get; set; }

public UInt32 LabelAsKey { get; set; }

public string ImagePath { get; set; }

public string Label { get; set; }

}

}

The Image property is a byte array that holds the raw bytes of the image. The model expects image data to be of this type for training. The LabelAsKey property is the encoded value of the Label.

Finally, the ModelOutput class represents the output of the model:

namespace ImageClassification.MachineLearning.DataModels

{

public class ModelOutput

{

public string ImagePath { get; set; }

public string Label { get; set; }

public string PredictedLabel { get; set; }

}

}

5.3 Data Loader

The DataLoader is the class that loads data from the images folder and pre-processes it. It has three public properties which expose the different datasets. In essence, all the magic happens within the constructor of the class, with the help of several private methods. The constructor needs only the training folder path to do it all. First we initialize the necessary fields, and then we use the methods to build the data processing pipeline and pre-process the data:

using ImageClassification.MachineLearning.DataModels;

using Microsoft.ML;

using Microsoft.ML.Data;

using System.Collections.Generic;

using System.IO;

namespace ImageClassification.MachineLearning

{

public class DataLoader

{

private readonly MLContext _mlContext;

private string _trainingFolder;

public IDataView TrainSet { get; private set; }

public IDataView ValidationSet { get; private set; }

public IDataView TestSet { get; private set; }

public DataLoader(string trainingFolder)

{

_trainingFolder = trainingFolder;

_mlContext = new MLContext(111);

var dataProcessPipeline = BuildDataProcessingPipeline();

LoadAndPrepareData(dataProcessPipeline);

}

/// <summary>

/// Feature engineering and data pre-processing.

/// </summary>

/// <returns>Data Processing Pipeline.</returns>

private EstimatorChain<ImageLoadingTransformer> BuildDataProcessingPipeline()

{

var dataProcessPipeline = _mlContext.Transforms.Conversion.MapValueToKey(

inputColumnName: "Label",

outputColumnName: "LabelAsKey")

.Append(_mlContext.Transforms.LoadRawImageBytes(

outputColumnName: "Image",

imageFolder: _trainingFolder,

inputColumnName: "ImagePath"));

return dataProcessPipeline;

}

private void LoadAndPrepareData(EstimatorChain<ImageLoadingTransformer> dataProcessPipeline)

{

IEnumerable<ImageData> images = LoadImages(_trainingFolder);

var imageData = _mlContext.Data.LoadFromEnumerable(images);

var shuffledImageData = _mlContext.Data.ShuffleRows(imageData);

var preparedData = dataProcessPipeline

.Fit(shuffledImageData)

.Transform(shuffledImageData);

var trainSplit = _mlContext.Data.TrainTestSplit(data: preparedData, testFraction: 0.3);

var validationTestSplit = _mlContext.Data.TrainTestSplit(trainSplit.TestSet);

TrainSet = trainSplit.TrainSet;

ValidationSet = validationTestSplit.TrainSet;

TestSet = validationTestSplit.TestSet;

}

private IEnumerable<ImageData> LoadImages(string trainingFolder)

{

var files = Directory.GetFiles(trainingFolder, "*",

searchOption: SearchOption.AllDirectories);

foreach (var file in files)

{

if ((Path.GetExtension(file) != ".jpg") && (Path.GetExtension(file) != ".png"))

continue;

var label = Path.GetFileName(file);

label = label.Substring(0, 3);

yield return new ImageData()

{

ImagePath = file,

Label = label

};

}

}

}

}

The BuildDataProcessingPipeline method creates a pipeline that encodes the label and loads each image as bytes from the defined folder. This pipeline is then used in LoadAndPrepareData. Essentially, this method does all the heavy lifting in this class. First it calls the LoadImages method, which creates labels for all images in the training folder. Then it shuffles the data and runs it through the pipeline. Finally, it splits the data into three datasets.
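It is worth working out how large the three datasets are. The first TrainTestSplit call uses testFraction: 0.3, and the second one relies on the default testFraction of TrainTestSplit, which is 0.1. The sketch below only does that arithmetic; `SplitSketch` is a hypothetical helper, and the real row counts are approximate because ML.NET assigns rows to the splits randomly.

```csharp
using System;

public static class SplitSketch
{
    // Fractions of the full dataset that end up in each set when the
    // held-out part of a first split is split a second time.
    public static (double Train, double Validation, double Test) Fractions(
        double firstTestFraction, double secondTestFraction)
    {
        double train = 1.0 - firstTestFraction;
        double validation = firstTestFraction * (1.0 - secondTestFraction);
        double test = firstTestFraction * secondTestFraction;
        return (train, validation, test);
    }

    public static void Main()
    {
        var (train, validation, test) = Fractions(0.3, 0.1);
        Console.WriteLine($"train: {train:F2}, validation: {validation:F2}, test: {test:F2}");
    }
}
```

With these values roughly 70% of the images are used for training, 27% for validation and only about 3% for testing. If a larger test set is wanted, passing an explicit testFraction to the second TrainTestSplit call is one way to get it.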

5.4 Image Classifier

The ImageClassifier class is the class that trains the model. The instance of DataLoader class is injected in this class because it contains necessary datasets. Apart from that, this class receives type of architecture as an input. This is done using enumeration ImageClassificationTrainer.Architecture.

using ImageClassification.MachineLearning.DataModels;

using Microsoft.ML;

using Microsoft.ML.Data;

using Microsoft.ML.Transforms;

using Microsoft.ML.Transforms.Image;

using Microsoft.ML.Vision;

using System;

using System.Collections.Generic;

using System.IO;

namespace ImageClassification.MachineLearning

{

public class ImageClassifier

{

private static string ModelPath => Path.Combine(AppContext.BaseDirectory,

"imageClassification.mdl");

private readonly MLContext _mlContext;

private ImageClassificationTrainer.Architecture _architecture;

private DataLoader _dataLoader;

private ITransformer _trainedModel;

public ImageClassifier(ImageClassificationTrainer.Architecture architecture,

DataLoader dataLoader)

{

_mlContext = new MLContext(111);

_architecture = architecture;

_dataLoader = dataLoader;

}

/// <summary>

/// Train model on defined data.

/// </summary>

public void Fit()

{

var trainingPipeline = BuildTrainingPipeline();

_trainedModel = trainingPipeline.Fit(_dataLoader.TrainSet);

}

/// <summary>

/// Save Model in the file.

/// </summary>

public void Save()

{

_mlContext.Model.Save(_trainedModel, _dataLoader.TrainSet.Schema, ModelPath);

}

private EstimatorChain<KeyToValueMappingTransformer> BuildTrainingPipeline()

{

var classifierOptions = new ImageClassificationTrainer.Options()

{

FeatureColumnName = "Image",

LabelColumnName = "LabelAsKey",

ValidationSet = _dataLoader.ValidationSet,

Arch = _architecture,

MetricsCallback = (metrics) => Console.WriteLine(metrics),

TestOnTrainSet = false,

ReuseTrainSetBottleneckCachedValues = true,

ReuseValidationSetBottleneckCachedValues = true,

Epoch = 20

};

return _mlContext.MulticlassClassification

.Trainers

.ImageClassification(classifierOptions)

.Append(_mlContext.Transforms

.Conversion.MapKeyToValue("PredictedLabel"));

}

}

}

The most important method in this class is BuildTrainingPipeline. This method defines the model that is used for training. Notice the Options object that we create. We define everything here, from the number of epochs to the architecture that is used. This method is called within the Fit method, which trains the model. The other public method, Save, stores the trained model in a file.

5.5 Predictor

The Predictor class is here to load the saved model and run some predictions. Usually, this class is not part of the same microservice as the trainers. We usually have one microservice that performs the training of the model. This model is saved into a file, from which the other microservice loads it and runs predictions based on the user input. Here is what this class looks like:

using ImageClassification.MachineLearning.DataModels;

using Microsoft.ML;

using System;

using System.Collections.Generic;

using System.IO;

using System.Linq;

namespace ImageClassification.MachineLearning

{

public class Predictor

{

private static string ModelPath => Path.Combine(AppContext.BaseDirectory,

"imageClassification.mdl");

private MLContext _mlContext;

private PredictionEngine<ModelInput, ModelOutput> _predictionEngine;

private ITransformer _trainedModel;

private DataLoader _dataLoader;

public Predictor(DataLoader dataLoader)

{

_mlContext = new MLContext(111);

if (!File.Exists(ModelPath))

{

throw new FileNotFoundException($"File {ModelPath} doesn't exist.");

}

using (var stream = new FileStream(ModelPath,

FileMode.Open,

FileAccess.Read,

FileShare.Read))

{

_trainedModel = _mlContext.Model.Load(stream, out _);

}

if (_trainedModel == null)

{

throw new Exception($"Failed to load Model");

}

_predictionEngine = _mlContext.Model

.CreatePredictionEngine<ModelInput, ModelOutput>(_trainedModel);

_dataLoader = dataLoader;

}

public IEnumerable<ModelOutput> MakeTestDatasetPredictions()

{

var predictionData = _trainedModel.Transform(_dataLoader.TestSet);

return _mlContext.Data

.CreateEnumerable<ModelOutput>(predictionData, reuseRowObject: true).Take(10);

}

}

}

In a nutshell, the model is loaded from a defined file, and predictions are run on the 10 images from the Test Dataset.

5.6 Usage and Results

Ok, let’s put all of this together.

using ImageClassification.MachineLearning;

using Microsoft.ML.Vision;

using System;

using System.IO;

namespace ImageClassification

{

class Program

{

static void Main(string[] args)

{

var dataLoader = new DataLoader(".\\dogs-vs-cats\\train");

var trainer = new ImageClassifier(

ImageClassificationTrainer.Architecture.MobilenetV2, dataLoader);

trainer.Fit();

// Persist the trained model; the Predictor created below loads it from this file.

trainer.Save();

var predictor = new Predictor(dataLoader);

Console.WriteLine("Make predictions on test dataset:");

var predictions = predictor.MakeTestDatasetPredictions();

foreach (var prediction in predictions)

{

string imageName = Path.GetFileName(prediction.ImagePath);

Console.WriteLine($"Image: {imageName} | Actual Value: {prediction.Label} | Predicted Value: {prediction.PredictedLabel}");

}

}

}

}

Here we first create an instance of the DataLoader class, using the path to the image folder. Then we create an instance of the ImageClassifier, using the MobileNetV2 architecture. After that we call the Fit method to train this model. Finally, we create a Predictor object and run predictions. Here is what we get as an output:

....

Phase: Bottleneck Computation, Dataset used: Train, Image Index: 17533

Phase: Bottleneck Computation, Dataset used: Train, Image Index: 17534

Phase: Bottleneck Computation, Dataset used: Train, Image Index: 17535

Phase: Bottleneck Computation, Dataset used: Train, Image Index: 17536

Phase: Bottleneck Computation, Dataset used: Train, Image Index: 17537

Phase: Bottleneck Computation, Dataset used: Train, Image Index: 17538

Phase: Bottleneck Computation, Dataset used: Train, Image Index: 17539

Phase: Bottleneck Computation, Dataset used: Train, Image Index: 17540

Phase: Bottleneck Computation, Dataset used: Train, Image Index: 17541

Phase: Bottleneck Computation, Dataset used: Train, Image Index: 17542

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 0, Accuracy: 0.98801804, Cross-Entropy: 0.036682364

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 1, Accuracy: 0.9871306, Cross-Entropy: 0.03759807

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 2, Accuracy: 0.9874266, Cross-Entropy: 0.038243674

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 3, Accuracy: 0.98624307, Cross-Entropy: 0.039044067

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 4, Accuracy: 0.9859473, Cross-Entropy: 0.03948401

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 5, Accuracy: 0.98639095, Cross-Entropy: 0.040133394

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 6, Accuracy: 0.986243, Cross-Entropy: 0.040519208

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 7, Accuracy: 0.98668677, Cross-Entropy: 0.041076425

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 8, Accuracy: 0.98639095, Cross-Entropy: 0.04141879

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 9, Accuracy: 0.98639077, Cross-Entropy: 0.041892104

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 10, Accuracy: 0.98639077, Cross-Entropy: 0.04219451

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 11, Accuracy: 0.98639077, Cross-Entropy: 0.042598486

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 12, Accuracy: 0.98653877, Cross-Entropy: 0.042873375

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 13, Accuracy: 0.9862429, Cross-Entropy: 0.04322276

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 14, Accuracy: 0.98639077, Cross-Entropy: 0.04347997

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 15, Accuracy: 0.98639077, Cross-Entropy: 0.04378552

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 16, Accuracy: 0.9862429, Cross-Entropy: 0.044029992

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 17, Accuracy: 0.986095, Cross-Entropy: 0.0442991

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 18, Accuracy: 0.9862429, Cross-Entropy: 0.04453304

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 19, Accuracy: 0.98639077, Cross-Entropy: 0.04477104

Phase: Training, Dataset used: Validation, Batch Processed Count: 676, Epoch: 20, Accuracy: 0.9862429, Cross-Entropy: 0.044995066

Make predictions on test dataset:

Image: cat.10541.jpg | Actual Value: cat | Predicted Value: cat

Image: cat.10386.jpg | Actual Value: cat | Predicted Value: cat

Image: cat.10119.jpg | Actual Value: cat | Predicted Value: cat

Image: cat.10114.jpg | Actual Value: cat | Predicted Value: cat

Image: cat.10332.jpg | Actual Value: cat | Predicted Value: cat

Image: cat.10266.jpg | Actual Value: cat | Predicted Value: dog

Image: cat.11038.jpg | Actual Value: cat | Predicted Value: cat

Image: cat.11025.jpg | Actual Value: cat | Predicted Value: cat

Image: cat.1107.jpg | Actual Value: cat | Predicted Value: cat

Image: cat.10110.jpg | Actual Value: cat | Predicted Value: cat

Notice that we got good predictions for nine of the ten images; only cat.10266.jpg was misclassified as a dog.
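Counting the matches in the ten predictions listed above gives a quick accuracy estimate. The following is a small sketch: the (actual, predicted) pairs are copied from that output, while the `AccuracySketch` class and its `Accuracy` method are illustrative names, not part of the solution.

```csharp
using System;
using System.Linq;

public static class AccuracySketch
{
    // Share of results where the predicted label equals the actual label.
    public static double Accuracy((string Actual, string Predicted)[] results) =>
        results.Length == 0
            ? 0.0
            : (double)results.Count(r => r.Actual == r.Predicted) / results.Length;

    public static void Main()
    {
        // Pairs taken from the ten predictions printed above.
        var results = new (string Actual, string Predicted)[]
        {
            ("cat", "cat"), ("cat", "cat"), ("cat", "cat"), ("cat", "cat"), ("cat", "cat"),
            ("cat", "dog"), ("cat", "cat"), ("cat", "cat"), ("cat", "cat"), ("cat", "cat")
        };

        Console.WriteLine(Accuracy(results));
    }
}
```

Nine matches out of ten give an accuracy of 0.9 on this sample. The sample is tiny and contains only cats, so the validation accuracy reported in the training log (around 0.986) is the more reliable figure.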

Conclusion

In this article, we explored the world of Transfer Learning. We focused on the image classification problem. We learned about some cool architectures and saw how we can use them with ML.NET.

Thank you for reading!

Nikola M. Zivkovic

CAIO at Rubik's Code

Nikola M. Zivkovic is the CAIO at Rubik’s Code and the author of the book “Deep Learning for Programmers”. He loves knowledge sharing, and he is an experienced speaker. You can find him speaking at meetups, conferences and as a guest lecturer at the University of Novi Sad.
