At the end of every month, we break down three research papers from the fields of machine learning, deep learning, and artificial intelligence that left the biggest impression on us that month. At the end of the article, we also list other papers that we found interesting but did not focus on, so you can check those out as well.

In general, we try to present papers that are going to have a big impact on the future of machine learning and deep learning. We believe that these proposals are going to change the way we do our jobs and push the whole field forward. Have fun!


YOLOv4: Optimal Speed and Accuracy of Object Detection

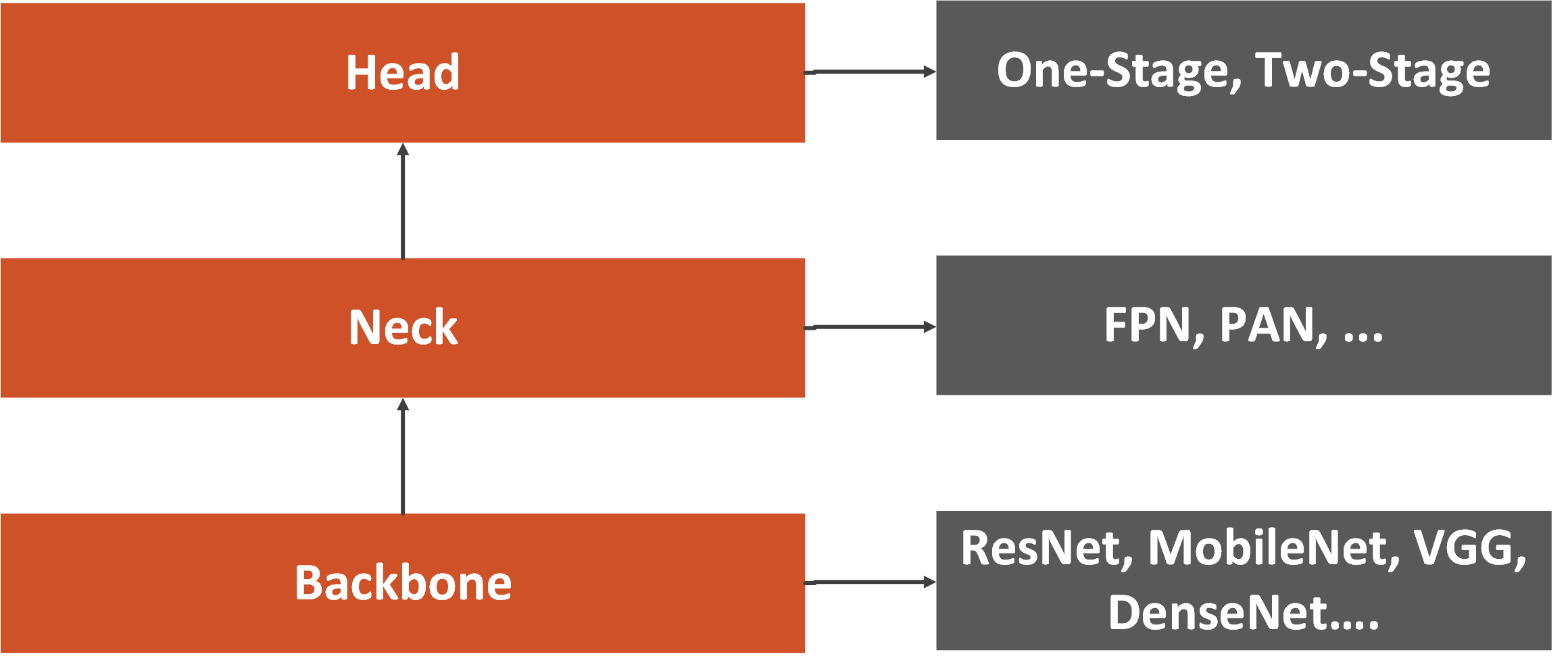

Every time a new version of YOLO comes out, there is a small celebration among engineers who work on computer vision problems. YOLO, or You Only Look Once, is a real-time object-detection neural network. It is quite neat and quick. This new, improved version targets convolutional neural networks that run in real time on a conventional GPU. The paper focuses on developing an efficient, powerful, and accurate object-detection model that can be trained quickly on a standard GPU. An object-detection network is usually composed of three parts, which the authors call the backbone, the neck, and the head. The backbone is usually a deep architecture pre-trained on the ImageNet dataset and used without its top layers, just as we do in transfer learning.

This can be any of the “large networks” like ResNet, DenseNet, or a variation of VGG, or MobileNet if you plan to run your object-detection model on a CPU. The neck is usually composed of several layers whose goal is to collect feature maps from different stages. This may be a Feature Pyramid Network or a Path Aggregation Network. Finally, the head is the part of the object-detection model that predicts the classes and the bounding boxes of objects. Based on the type of head, we distinguish two types of object-detection models:

- One-stage object-detection models – Predict the classes and bounding boxes of objects directly from the image.

- Two-stage object-detection models – First propose regions of interest, and then classify each proposed region.

The authors introduce two terms: Bag of freebies (BoF) and Bag of specials (BoS). Bag of freebies refers to methods that change only the training strategy or increase only the training cost, so they come for free at inference time. One such method is data augmentation, which increases the variability of the input images and makes the model more robust. Other methods that can be considered part of the Bag of freebies are random erase, CutOut, grid mask, DropOut, DropConnect, etc. All these methods tamper with the input images and/or feature maps and reduce the effect of bias in the input data. Finally, the Bag of freebies also includes objective functions, such as the loss used for bounding-box (BBox) regression. Bag of specials refers to plugin modules and post-processing methods that increase the inference cost slightly but improve the accuracy of object detection noticeably. These can be any methods that enhance certain attributes of a model, for example enlarging the receptive field, introducing an attention mechanism, or strengthening feature integration capability.
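To make the idea of a "freebie" concrete, here is a minimal sketch of CutOut on a tiny grayscale image stored as a list of rows. The function name `cutout` and its parameters are our own choices for illustration, and the position of the square is passed in explicitly, whereas in real training it would be sampled at random for every image.

```python
def cutout(image, top, left, size, fill=0):
    """Return a copy of `image` with a size x size square replaced by `fill`.

    `image` is a list of rows (a 2D grayscale image). The square is clipped
    at the image borders.
    """
    height, width = len(image), len(image[0])
    result = [row[:] for row in image]  # copy so the input stays untouched
    for y in range(max(0, top), min(height, top + size)):
        for x in range(max(0, left), min(width, left + size)):
            result[y][x] = fill
    return result


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12]]
augmented = cutout(image, top=0, left=2, size=2)
for row in augmented:
    print(row)
# [1, 2, 0, 0]
# [5, 6, 0, 0]
# [9, 10, 11, 12]
```

The model never sees the masked pixels during training, but nothing changes at inference time, which is why this kind of method costs nothing once the model is deployed.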

Based on all of these, the architecture of YOLOv4 consists of the following parts:

• Backbone: CSPDarknet53 – a Cross Stage Partial (CSP) network, which reduces heavy inference computation at the level of the network architecture.

• Neck: Spatial Pyramid Pooling – SPP (so the object detector can receive images of arbitrary size/scale) and Path Aggregation Network – PAN (originally proposed to boost information flow in a proposal-based instance segmentation framework)

• Head: YOLOv3

• Bag of Freebies (BoF) for backbone: CutMix and Mosaic data augmentation, DropBlock regularization, Class label smoothing

• Bag of Specials (BoS) for backbone: Mish activation, Cross-stage partial connections (CSP), Multi-input weighted residual connections (MiWRC)

• Bag of Freebies (BoF) for detector: CIoU-loss, CmBN, DropBlock regularization, Mosaic data augmentation, Self-Adversarial Training, Eliminate grid sensitivity, Using multiple anchors for single ground truth, Cosine annealing scheduler, Optimal hyperparameters, Random training shapes

• Bag of Specials (BoS) for detector: Mish activation, SPP-block, SAM-block, PAN path-aggregation block, DIoU-NMS
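Two of the ingredients listed above are easy to write down. The sketch below shows the Mish activation, x · tanh(softplus(x)), and the Distance-IoU score that DIoU-NMS relies on and that CIoU-loss extends with an aspect-ratio term. The function names and the (x1, y1, x2, y2) box format are our assumptions, boxes are assumed to have positive area, and this is not the Darknet implementation.

```python
import math


def mish(x):
    """Mish activation: x * tanh(softplus(x)), with softplus(x) = ln(1 + e^x).

    Fine for moderate inputs; math.exp overflows for very large x.
    """
    return x * math.tanh(math.log1p(math.exp(x)))


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def diou(a, b):
    """Distance-IoU: IoU minus the normalized squared distance between centers."""
    dx = ((a[0] + a[2]) - (b[0] + b[2])) / 2
    dy = ((a[1] + a[3]) - (b[1] + b[3])) / 2
    # Squared diagonal of the smallest box that encloses both boxes.
    enclose_w = max(a[2], b[2]) - min(a[0], b[0])
    enclose_h = max(a[3], b[3]) - min(a[1], b[1])
    return iou(a, b) - (dx ** 2 + dy ** 2) / (enclose_w ** 2 + enclose_h ** 2)


print(round(mish(1.0), 4))
print(round(diou((0, 0, 2, 2), (1, 1, 3, 3)), 4))
```

Unlike plain IoU, the DIoU score keeps decreasing as two non-overlapping boxes move further apart, so it still carries a useful signal when the boxes do not intersect at all.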

The result is a state-of-the-art object detector that is faster than alternatives of comparable accuracy. The YOLOv4 results lie on the Pareto optimality curve and are superior to the fastest and most accurate detectors in terms of both speed and accuracy, as you can see in the image above.
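If the term is new to you, a detector lies on the Pareto curve when no other detector is at least as fast and at least as accurate while being strictly better in one of the two. A small sketch of that check follows; the function name is ours and the numbers are made up for illustration, they are not results from the paper.

```python
def pareto_front(detectors):
    """Return the names of detectors that no other detector dominates.

    Each detector is a (name, fps, accuracy) tuple; higher is better for both.
    """
    front = []
    for name, fps, acc in detectors:
        dominated = any(
            other_fps >= fps and other_acc >= acc
            and (other_fps > fps or other_acc > acc)
            for _, other_fps, other_acc in detectors
        )
        if not dominated:
            front.append(name)
    return front


# Made-up numbers for illustration only, not results from the paper.
detectors = [
    ("A", 30, 40.0),
    ("B", 60, 38.0),
    ("C", 25, 39.0),  # slower and less accurate than A
    ("D", 90, 33.0),
]
print(pareto_front(detectors))  # ['A', 'B', 'D']
```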

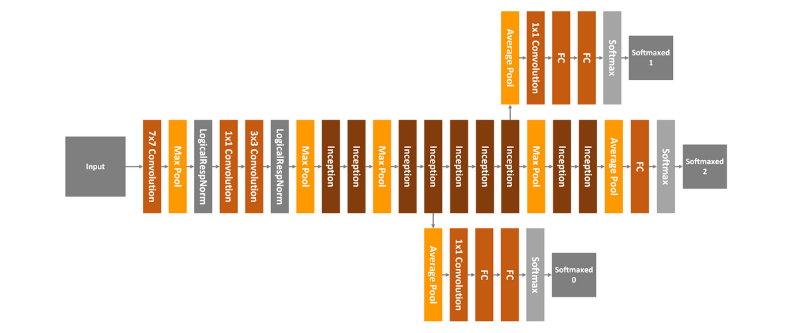

ResNeSt: Split-Attention Networks

Ok, this is the second computer vision paper that caught our undivided attention (ba dam tus!). We already mentioned that image classification and object detection solutions use a deep convolutional neural network (CNN) as a backbone and then build on top of it. This is done because the backbone has already learned low-level features, like curves and lines, and learned them well, so we don’t need to learn them again. One of the popular base architectures is ResNet or some variation of it. These networks are simple and modular, and they provide really good results. However, in order to improve the accuracy of a ResNet backbone, we often need to modify the architecture itself.

The accuracy of image classification models has also been improved with large-scale neural architecture search (NAS). However, even though these NAS-derived models achieve high accuracy, their usage is limited. Essentially, they are not optimized for training efficiency, memory usage, and processing on commercial hardware. The authors of this paper build on top of the ResNet architecture and add Split-Attention blocks to it. The result is a novel architecture called ResNeSt. The goal was to create a CNN backbone for transfer learning that is easy to train and more robust. In a nutshell, feature-map Split-Attention blocks are used instead of classical residual blocks. These attention blocks divide the feature map into several groups and finer-grained subgroups (splits). The feature representation of each group is then determined via a weighted combination of the representations of its splits. Here is how the proposed Split-Attention block works:

The features are divided into several groups, controlled by the hyperparameter K. This hyperparameter defines the number of feature-map groups, which are also called cardinal groups. Another important hyperparameter is R, which defines the number of splits within each cardinal group. This means that the total number of feature groups is G = KR. A series of transformations is applied to each individual group, and the combined representation of a cardinal group is calculated by element-wise summation across its splits. Global average pooling is then applied to this sum to gather channel-wise statistics, from which the attention weights of the splits are computed. Finally, the cardinal group representations are concatenated along the channel dimension.
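The flow of the attention block described above can be sketched in a few lines of plain Python. This is a simplified illustration, not the authors' implementation: feature maps are nested lists, the per-group transformations are assumed to have been applied already, and the learned dense layers that produce the attention logits are replaced by one hypothetical scalar per split (`split_logit_scale`).

```python
import math


def split_attention(splits, split_logit_scale):
    """Fuse the R splits of one cardinal group with channel-wise soft attention.

    `splits` holds R feature maps, each a list of C channels, each channel a
    flat list of spatial values. `split_logit_scale` holds one hypothetical
    scalar per split; it stands in for the learned dense layers.
    """
    num_channels = len(splits[0])
    num_positions = len(splits[0][0])
    fused = []
    for c in range(num_channels):
        # 1) Element-wise sum across splits, 2) global average pooling.
        summed = [sum(split[c][i] for split in splits) for i in range(num_positions)]
        pooled = sum(summed) / num_positions
        # 3) Softmax over the splits gives one attention weight per split.
        logits = [scale * pooled for scale in split_logit_scale]
        peak = max(logits)
        exps = [math.exp(logit - peak) for logit in logits]
        weights = [e / sum(exps) for e in exps]
        # 4) Weighted combination of the splits for this channel.
        fused.append([
            sum(w * split[c][i] for w, split in zip(weights, splits))
            for i in range(num_positions)
        ])
    return fused


def resnest_block_core(cardinal_groups, split_logit_scale):
    """Apply split attention per cardinal group and concatenate along channels."""
    output = []
    for splits in cardinal_groups:
        output.extend(split_attention(splits, split_logit_scale))
    return output


# K = 2 cardinal groups, R = 2 splits each, 1 channel with 2 spatial positions.
groups = [
    [[[1.0, 3.0]], [[3.0, 5.0]]],
    [[[0.0, 2.0]], [[4.0, 8.0]]],
]
print(resnest_block_core(groups, [0.0, 0.0]))  # [[2.0, 4.0], [2.0, 5.0]]
```

With all logit scales set to zero the attention is uniform, so each output channel is simply the average of its splits. Non-zero scales shift the weight toward one split, which is the part the real block learns.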

Apart from the Split-Attention block, additional changes to the ResNet architecture are applied. Instead of strided convolutions, the authors use 3 × 3 average pooling layers for downsampling. This way spatial information is preserved. Apart from that, the first 7 × 7 convolutional layer is replaced with three 3 × 3 convolutional layers, following the modifications known as ResNet-D. Finally, a 2 × 2 average pooling layer is added to the shortcut connection prior to the 1 × 1 convolutional layer. The resulting architecture boosts performance across image classification, object detection, instance segmentation, and semantic segmentation.

Training with Quantization Noise for Extreme Model Compression

Today’s neural network architectures can have a huge memory footprint. For example, ResNet50, a variant of the ResNet architecture that we mentioned earlier, has over 23 million trainable parameters. This limits the usage of such networks in embedded systems. There are several techniques that can help reduce the size of a network. Pruning and distillation techniques reduce the number of network weights, while other model compression techniques reduce the memory footprint of the weights that remain. One of the interesting techniques, used in this paper, is quantization. Its aim is to reduce the number of bits per weight, which means that one can compress the model further even if its architecture is already optimized.
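A quick back-of-the-envelope calculation shows why the number of bits per weight matters. The sketch below counts only the storage for the weights themselves (no codebooks, activations, or file-format overhead) and uses the rough 23 million figure quoted above; the helper name is ours.

```python
def model_size_mb(num_parameters, bits_per_weight):
    """Storage needed for the weights alone, in megabytes (1 MB = 10**6 bytes)."""
    return num_parameters * bits_per_weight / 8 / 10**6


num_parameters = 23_000_000  # the rough ResNet50 figure quoted above
for bits in (32, 8, 4):
    print(f"{bits:>2}-bit weights: {model_size_mb(num_parameters, bits):.1f} MB")
# 32-bit weights: 92.0 MB
#  8-bit weights: 23.0 MB
#  4-bit weights: 11.5 MB
```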

Quantization methods of this kind essentially compress the weight matrix W by assigning to each block b_kl an index that points to a codeword c in a codebook C. They then store the codebook C and the resulting indices instead of the real weights. Finally, during inference, they reconstruct an approximation of the original matrix W from the stored information. However, the quantization techniques used thus far made approximations that induced a significant drop in performance. That is why the authors propose applying quantization noise during the training process. They build on the proven idea of Quantization Aware Training, and their approach works with a variety of quantization methods. Another cool thing about it is that it can also be applied to already trained networks as a post-processing step.

At the core of the proposed solution lies the Quant-Noise technique, which applies the noise only to a random subset of weights during training. According to the authors, combining this technique with a Product Quantizer gives the best trade-off between accuracy and model size. Product Quantization is a method that works on groups of weights. It splits each column of the weight matrix W into m subvectors and learns the same codebook for all of the resulting subvectors.
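Here is a minimal sketch of Product Quantization in plain Python, under a few assumptions of ours: the matrix is a list of rows whose row count is divisible by m, the shared codebook is learned with a few iterations of plain k-means, and the codebook is initialized with the first k distinct subvectors. All function names are hypothetical.

```python
def split_columns(matrix, m):
    """Split every column of `matrix` (a list of rows) into m equal subvectors."""
    rows, cols = len(matrix), len(matrix[0])
    sub_len = rows // m  # assumes the number of rows is divisible by m
    subvectors = []
    for col in range(cols):
        column = [matrix[r][col] for r in range(rows)]
        for part in range(m):
            subvectors.append(tuple(column[part * sub_len:(part + 1) * sub_len]))
    return subvectors


def squared_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


def nearest(codebook, vector):
    """Index of the codeword closest to `vector`."""
    return min(range(len(codebook)), key=lambda i: squared_distance(codebook[i], vector))


def learn_codebook(subvectors, k, iterations=10):
    """Plain k-means (Lloyd's algorithm), one codebook shared by all subvectors."""
    codebook = list(dict.fromkeys(subvectors))[:k]  # first k distinct subvectors
    for _ in range(iterations):
        clusters = [[] for _ in codebook]
        for vector in subvectors:
            clusters[nearest(codebook, vector)].append(vector)
        for i, members in enumerate(clusters):
            if members:  # keep the old codeword if a cluster is empty
                codebook[i] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return codebook


def product_quantize(matrix, m, k):
    """Return the shared codebook and one codeword index per subvector."""
    subvectors = split_columns(matrix, m)
    codebook = learn_codebook(subvectors, k)
    indices = [nearest(codebook, v) for v in subvectors]
    return codebook, indices


def reconstruct(codebook, indices, rows, cols, m):
    """Rebuild an approximation of the matrix from the codebook and the indices."""
    sub_len = rows // m
    matrix = [[0.0] * cols for _ in range(rows)]
    for position, index in enumerate(indices):
        col, part = divmod(position, m)
        for offset, value in enumerate(codebook[index]):
            matrix[part * sub_len + offset][col] = value
    return matrix


W = [[1.0, 5.0],
     [2.0, 6.0],
     [1.0, 1.0],
     [2.0, 2.0]]
codebook, indices = product_quantize(W, m=2, k=2)
print(codebook)  # [(1.0, 2.0), (5.0, 6.0)]
print(indices)   # [0, 0, 1, 0]
```

In this toy case the four subvectors take only two distinct values, so two codewords reconstruct W exactly. On real weights the reconstruction is only approximate, and that approximation error is the drop in performance that Quant-Noise tries to make the network robust to.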

So, the whole thing works something like this:

– Pick the quantization method to use.

– Compute the blocks b_kl that correspond to that quantization method.

– During each forward pass, randomly select a subset of these blocks.

– Apply the quantization noise, i.e. the distortion of the chosen quantization method, to them.

– During the backward pass compute gradients for all the weights, using a straight-through estimator (STE) for the distorted weights.
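The steps above can be sketched for a single layer as follows. This is an illustration under our own assumptions, not the authors' code: blocks are contiguous chunks of a flat weight list, the noise is uniform scalar quantization to a given number of bits, and the "layer" is a plain dot product so that the straight-through gradient can be written by hand.

```python
import random


def quantize_block(block, bits=8):
    """Uniform scalar quantization of one block to 2**bits levels (an assumed choice)."""
    low, high = min(block), max(block)
    if high == low:
        return list(block)
    step = (high - low) / (2 ** bits - 1)
    return [low + round((w - low) / step) * step for w in block]


def quant_noise_forward(weights, block_size, p, rng, bits=8):
    """Return the weights used in one forward pass and the mask of distorted blocks.

    Each contiguous block of `block_size` weights is quantized with
    probability p; the remaining blocks keep their full-precision values.
    """
    noisy, mask = [], []
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]
        distort = rng.random() < p
        noisy.extend(quantize_block(block, bits) if distort else block)
        mask.append(distort)
    return noisy, mask


def linear_forward_backward(weights, inputs, upstream_grad=1.0):
    """y = sum(w * x). With a straight-through estimator, dy/dw is just x
    whether or not w was distorted, so every weight receives a gradient."""
    output = sum(w * x for w, x in zip(weights, inputs))
    grads = [upstream_grad * x for x in inputs]
    return output, grads


rng = random.Random(0)
weights = [0.10, -0.42, 0.33, 0.07, -0.25, 0.91, 0.58, -0.76]
noisy, mask = quant_noise_forward(weights, block_size=4, p=0.5, rng=rng, bits=2)
output, grads = linear_forward_backward(noisy, inputs=[1.0] * 8)
print(mask, noisy, grads)
```

Because only some blocks are distorted in each pass, the undistorted weights still receive exact gradients, while the network gradually gets used to the quantized values it will have to work with after compression.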

In the paper, you can see how the noise is applied to different quantization techniques. The results are really interesting, showing that this approach provides high compression rates at a reasonable cost in accuracy.

Other Amazing Papers from this Month

- ASL Recognition with Metric-Learning based Lightweight Network

- Lossless Image Compression through Super-Resolution

- Probabilistic Regression for Visual Tracking

- Towards Discriminability and Diversity: Batch Nuclear-norm Maximization under Label Insufficient Situations

- Dynamic R-CNN: Towards High-Quality Object Detection via Dynamic Training

Conclusion

In this article, we had a chance to read about three really cool papers that, as we see it, are going to change the way we perform our jobs. First, we saw the new and improved YOLO. Then we explored the new ResNeSt architecture. Finally, we saw how we can improve model compression using Quant-Noise. Did you have any favorites this month? Let us know.

Thank you for reading!

Nikola M. Zivkovic

CAIO at Rubik's Code

Nikola M. Zivkovic is a CAIO at Rubik’s Code and the author of the book “Deep Learning for Programmers”. He loves knowledge sharing, and he is an experienced speaker. You can find him speaking at meetups and conferences, and as a guest lecturer at the University of Novi Sad.

Rubik’s Code is a boutique data science and software service company with more than 10 years of experience in Machine Learning, Artificial Intelligence & Software development. Check out the services we provide.