In the literature, it is often mentioned that deep neural networks are modeled on biological nervous systems. The goal, after all, is to give a computer the ability to solve certain tasks by processing data, not by being explicitly programmed. To perceive things, so to speak.

However, this is not entirely true. Different types of tasks require specific neural networks. These networks are not general; they depend on the type of data being processed. For example, you would use one family of networks for processing images (e.g. Convolutional Neural Networks such as ResNet) and another for processing audio (e.g. RNNs, LSTMs, 1D convolutions).

Because every network is built for an individual purpose, each one relies on different priors. These priors are useful: they bring in the information and inductive biases that help networks learn. However, they also limit each neural network to a specific purpose.

A new supermodel (not that kind of supermodel, you silly) proposed by authors from DeepMind (full paper: Perceiver: General Perception with Iterative Attention) aims to solve this problem and bring these systems closer to real biological systems, which perceive the world around them by processing different signals simultaneously.

This new network is based on the Transformer architecture and it is called Perceiver. Google’s AI lead Jeff Dean has described it as one model that could handle any task, and “learn” faster, with less data.

1. Why is the Perceiver Important?

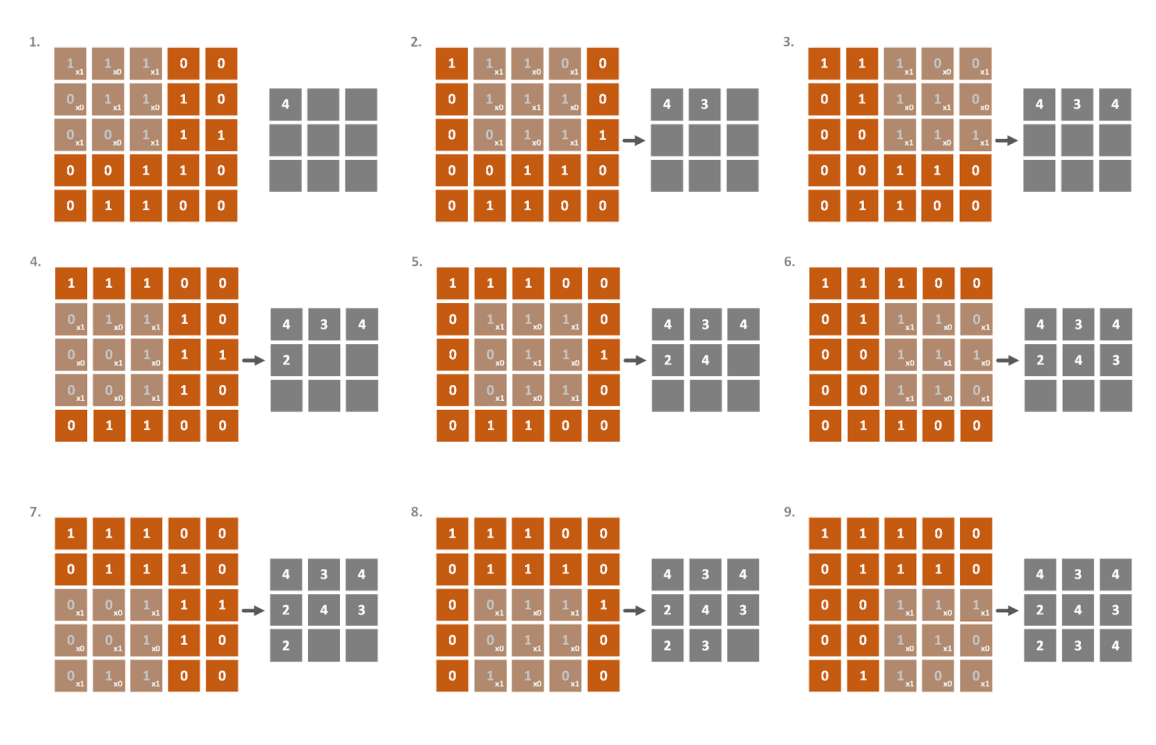

We could say that the world of machine learning and deep learning is crossing another threshold with Perceiver. To explain what improvements Perceiver makes, let’s take as an example an image processing pipeline with a CNN. This pipeline assumes that the image is a grid composed of pixels. Every pixel has its coordinates in the image.

Also, these networks assume that each pixel in the image is most strongly related to the pixels near it. After all, this is how computer vision algorithms worked even before the deep learning boom in 2012. That is why we slide filters (with shared weights) over an image and build up receptive fields. This means that the relation between the pixels is built into the architecture of the network.

In a similar way, Vision Transformers (ViT) split images into patches and then create a vector from each patch of pixels. This is done because images contain too many pixels to be fed into Transformers directly. So, in a way, this correlation between pixels is built into this architecture as well. This is not just the case with computer vision tasks, but with audio processing tasks, and so on. This is what the authors of the paper mean when they say that these priors “lock models to individual modalities”.
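
To make the patch idea concrete, here is a minimal NumPy sketch of how an image can be cut into non-overlapping patches and flattened into vectors. The function name `image_to_patches` and the 16×16 patch size are illustrative assumptions, not code from the ViT or Perceiver papers.

```python
import numpy as np

def image_to_patches(image, patch_size):
    """Split an (H, W, C) image into flattened, non-overlapping patches."""
    h, w, c = image.shape
    assert h % patch_size == 0 and w % patch_size == 0
    rows, cols = h // patch_size, w // patch_size
    # Separate the patch grid (rows, cols) from the pixels inside each patch.
    patches = image.reshape(rows, patch_size, cols, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)  # (rows, cols, p, p, c)
    # One row per patch, one column per pixel value inside the patch.
    return patches.reshape(rows * cols, patch_size * patch_size * c)

image = np.zeros((224, 224, 3), dtype=np.float32)
patches = image_to_patches(image, patch_size=16)
print(patches.shape)  # (196, 768)
```

The grid structure is baked into this preprocessing step: the code only makes sense if the input really is a 2D image.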

This is the first thing that Perceiver is trying to solve. The Perceiver tries to decouple the architecture of the network from the data it processes. The logic is that we should move forward and build deep learning systems that are agnostic of the type of data they process. Another problem that Perceiver aims to solve is the bottleneck of the Transformer, since Perceiver is itself a Transformer-based architecture. As we already mentioned, even the Vision Transformer architecture splits images into smaller sections, because images are generally too large for Transformers.

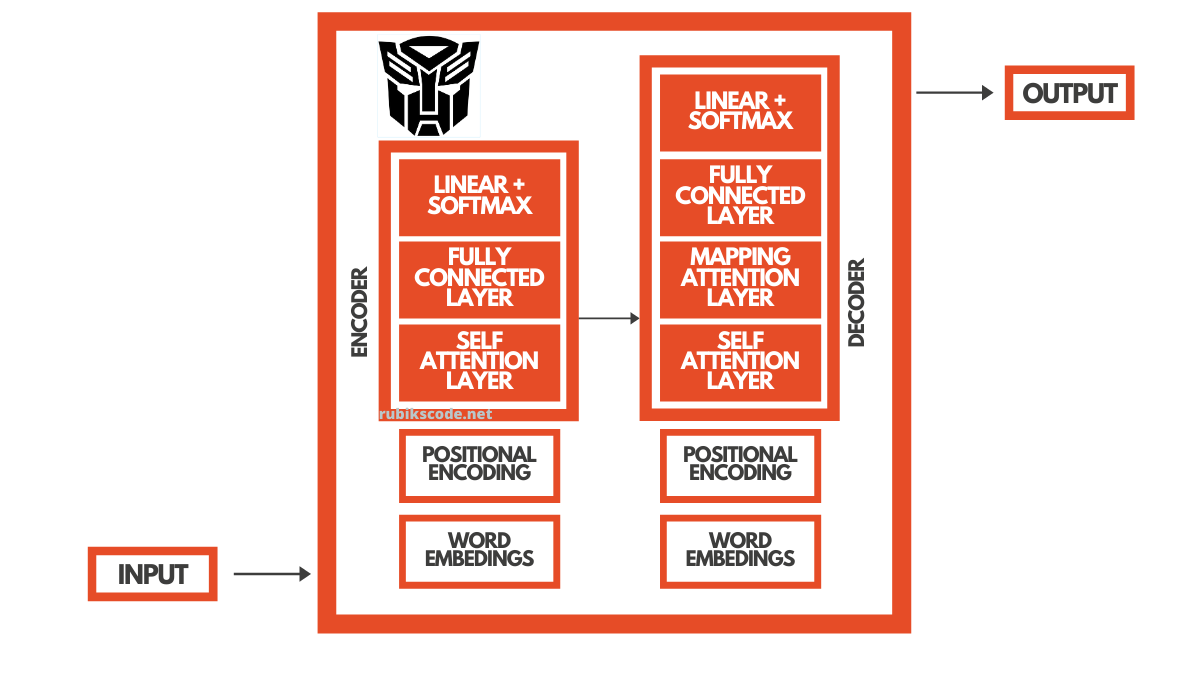

This is largely due to the fact that Transformers are sequence-to-sequence architectures, initially built for text processing and NLP. Transformers use so-called self-attention to transform a sequence of elements into another sequence of elements, for example to translate an English sentence into a French sentence.

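Self-attention compares every element of the input with every other element, so its cost grows quadratically with the length of the input. Text inputs are much shorter than images (a sentence has tens of tokens, while a 224×224 image has 50,176 pixels), so this was manageable for NLP but it is a real problem for images. This is usually referred to as the Transformer bottleneck or the quadratic scaling problem. Perceiver aims to solve this problem too, and asks “Can we be smarter when calculating attention?”. So let’s take a look at the Perceiver architecture and find out what it looks like.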
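
A quick back-of-the-envelope calculation shows how fast this grows. The sketch below simply counts query-key pairs in one self-attention layer; the sequence length of 512 tokens and the 16×16 patch size are assumed values chosen for illustration.

```python
def attention_scores(num_queries, num_keys):
    """Number of query-key dot products in one attention layer."""
    return num_queries * num_keys

text_tokens = 512                   # assumed length of a long text sequence
image_pixels = 224 * 224            # 50,176 pixels
image_patches = (224 // 16) ** 2    # 196 patches of 16x16 pixels

text_cost = attention_scores(text_tokens, text_tokens)
pixel_cost = attention_scores(image_pixels, image_pixels)
patch_cost = attention_scores(image_patches, image_patches)

print(f"text:    {text_cost:,}")    # 262,144
print(f"pixels:  {pixel_cost:,}")   # 2,517,630,976
print(f"patches: {patch_cost:,}")   # 38,416
print(f"pixels vs text: {pixel_cost // text_cost}x")  # 9604x
```

Attending over raw pixels is thousands of times more expensive than attending over text of this length, which is why patching is so tempting.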

2. Perceiver Architecture

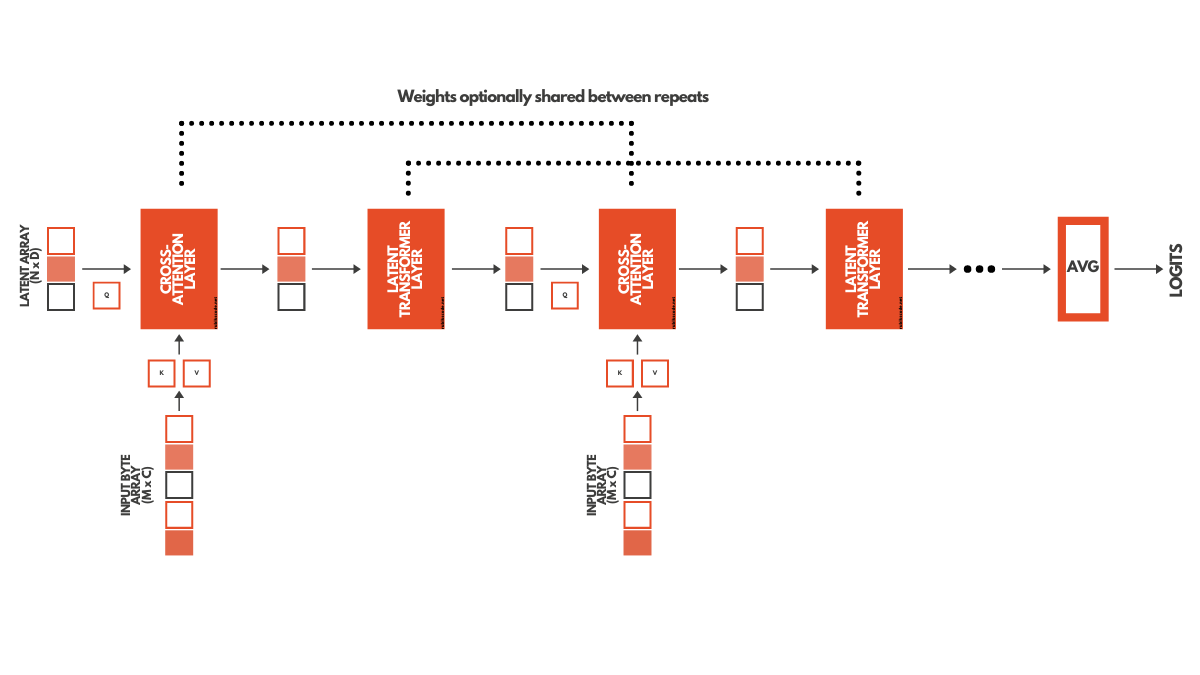

The authors of Perceiver from DeepMind had a couple of really interesting ideas and combined some old and new concepts with one neat hack. In essence, Perceiver is composed of two types of layers: the Cross-Attention layer and the Latent Transformer layer.

The idea is to utilize Cross-Attention layers (we will see in a bit what they are) to compress the input data into latent space vectors that can be processed by Latent Transformer layers. So, technically, we may say that the real goal of the Perceiver was to solve the Transformer bottleneck, and that this brought a certain level of data agnosticism with it. Ok, let’s see what that architecture looks like.

2.1 One Perceiver to Rule Them All

The original Transformer paper changed the world with the concept of attention (after all, Attention Is All You Need). This concept is structured around query-key-value (QKV) attention. Essentially, attention applies three networks (the query, key, and value networks, typically MLPs or simple linear projections) to each element of an input array.

The output is three arrays that preserve the sequence length of the input. As you can see in the image above, every Cross-Attention layer uses the input data to calculate the K and V arrays. The remaining array, Q, comes from a learned latent array. According to the paper, this latent array is randomly initialized and then learned together with the rest of the network’s parameters.

Q, K, and V are then used to produce latent space vectors. These vectors are not as big as the inputs of a standard Transformer, which means that they can be processed by the Transformer layers without a problem. If this whole thing reminds you of Recurrent Neural Networks (RNNs), that is because a Perceiver that shares weights between its repeated blocks can be interpreted as an RNN that is unrolled in depth using the same input, rather than in time.

The key is that the same input is always used for computing K and V. This input can be an image with its pixels rolled out into a 1D array, or it can be a similarly processed audio signal. And that is the beauty of this solution. It doesn’t matter what.
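
As a small illustration of "it doesn’t matter what", the sketch below flattens three different kinds of input into the same (M, C) layout: M elements with C channels each. The helper name `flatten_input` and the concrete shapes are assumptions made for this example.

```python
import numpy as np

def flatten_input(x):
    """Collapse all index dimensions into one, keeping channels: (..., C) -> (M, C)."""
    x = np.asarray(x)
    return x.reshape(-1, x.shape[-1])

image = np.zeros((224, 224, 3))    # height x width x RGB
audio = np.zeros((48000, 1))       # samples x 1 channel (hypothetical 1 s clip at 48 kHz)
points = np.zeros((2000, 3))       # points x (x, y, z)

for name, x in [("image", image), ("audio", audio), ("points", points)]:
    print(name, flatten_input(x).shape)
# image (50176, 3)
# audio (48000, 1)
# points (2000, 3)
```

After this step, the network sees every modality as a plain list of elements; only M and C differ.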

2.2 Cross-Attention Perceiver Layers

The Cross-Attention layers are the beauty of the Perceiver approach. They address the problem of scaling attention-like architectures to large and generic inputs. It is kind of a hack too, so I expect that this solution will be improved in the future. If we denote the number of input elements as M (for example, the number of pixels), the complexity of the QKV self-attention operation is O(M²). However, if we use cross-attention, K and V are just projections of the input, while Q is a projection of a learned latent array. This latent array has N elements, and we choose N << M. That way the complexity of the attention operation is reduced to O(MN).
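
Here is a minimal single-head sketch of this cross-attention in NumPy. It assumes plain linear projections for the query, key, and value networks and uses made-up sizes (a 64×64 RGB image, 128 latents); it is meant to show the shapes involved, not to reproduce DeepMind’s implementation.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(latents, inputs, w_q, w_k, w_v):
    q = latents @ w_q                        # (N, D): queries come from the latents
    k = inputs @ w_k                         # (M, D): keys come from the input
    v = inputs @ w_v                         # (M, D): values come from the input
    scores = q @ k.T / np.sqrt(q.shape[-1])  # (N, M): this is the O(MN) part
    return softmax(scores, axis=-1) @ v      # (N, D): output has latent size

rng = np.random.default_rng(0)
M, C = 64 * 64, 3      # 4,096 input elements with 3 channels (assumed sizes)
N, D = 128, 32         # 128 latent vectors of width 32 (assumed sizes)

inputs = rng.normal(size=(M, C))
latents = rng.normal(size=(N, D))
w_q = 0.1 * rng.normal(size=(D, D))
w_k = 0.1 * rng.normal(size=(C, D))
w_v = 0.1 * rng.normal(size=(C, D))

out = cross_attention(latents, inputs, w_q, w_k, w_v)
print(out.shape)  # (128, 32)
```

Note that the score matrix has N × M entries instead of M × M, and the output has N rows no matter how large the input is.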

2.3 Latent Transformer Perceiver Layers

Transformer layers of Perceiver are the same as the ones in the GPT-2 architecture, which are based on the decoder of the original Transformer architecture. Because Cross-Attention produces a much smaller latent array, which is trained to retain the information relevant for the task, self-attention over it costs only O(N²) regardless of the input size. This is why Perceiver is able to utilize deeper Transformers without relying on domain-specific assumptions.
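
Putting the two layer types together, the loop below is a heavily simplified sketch of the iterative structure: cross-attend to the input, refine the latents with self-attention, and repeat with the same weights. It assumes single-head attention with linear projections and leaves out layer normalization, the MLP blocks, and the classification head, so treat it as an illustration of the data flow only.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def attend(queries, context, w_q, w_k, w_v):
    q, k, v = queries @ w_q, context @ w_k, context @ w_v
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v

def perceiver_forward(inputs, latents, cross_weights, latent_weights, num_iterations):
    z = latents
    for _ in range(num_iterations):
        # Cross-attention: the latents query the SAME input at every iteration.
        z = z + attend(z, inputs, *cross_weights)
        # Latent self-attention: queries, keys and values all come from z, cost O(N^2).
        z = z + attend(z, z, *latent_weights)
    return z.mean(axis=0)  # average the latents into one summary vector

rng = np.random.default_rng(0)
M, C, N, D = 4096, 3, 64, 32   # assumed sizes
inputs = rng.normal(size=(M, C))
latents = rng.normal(size=(N, D))
cross_weights = (0.1 * rng.normal(size=(D, D)),   # query projection (latents)
                 0.1 * rng.normal(size=(C, D)),   # key projection (inputs)
                 0.1 * rng.normal(size=(C, D)))   # value projection (inputs)
latent_weights = tuple(0.1 * rng.normal(size=(D, D)) for _ in range(3))

summary = perceiver_forward(inputs, latents, cross_weights, latent_weights, num_iterations=4)
print(summary.shape)  # (32,)
```

Because the same weights and the same input are reused in every iteration, the loop reads like an RNN that is unrolled in depth rather than in time.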

2.4 Positional Encoding in Perceiver

If you are familiar with the original Transformer paper, you know that positional encoding is very important for it. Attention on its own ignores the order of the elements in the input sequence, yet that order is quite important. This makes sense, since NLP tasks deal with text and the position of a word in a sentence matters a lot. The same goes for the Perceiver too. The difference is that Perceiver uses Fourier feature position encodings. Only mentioning Fourier brings me back to my college days 🙂 The truth is, however, that this technique has gained popularity in recent years.
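
Below is a small sketch of what Fourier feature position encodings can look like for an image. The frequency schedule (linearly spaced from 1 up to half the resolution) and the choice to concatenate the raw coordinates follow my reading of the paper and should be treated as assumptions; `num_bands=8` and the 32×32 image size are arbitrary.

```python
import numpy as np

def fourier_features(positions, num_bands, max_resolution):
    """positions: (M, d) coordinates in [-1, 1]. Returns (M, d * (2 * num_bands + 1))."""
    freqs = np.linspace(1.0, max_resolution / 2.0, num_bands)           # (K,)
    angles = np.pi * positions[..., None] * freqs                       # (M, d, K)
    feats = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)   # (M, d, 2K)
    feats = feats.reshape(positions.shape[0], -1)                       # (M, d * 2K)
    return np.concatenate([positions, feats], axis=-1)                  # add raw coordinates

h, w = 32, 32
ys, xs = np.meshgrid(np.linspace(-1, 1, h), np.linspace(-1, 1, w), indexing="ij")
positions = np.stack([ys, xs], axis=-1).reshape(-1, 2)                  # (1024, 2)

pos = fourier_features(positions, num_bands=8, max_resolution=32)
print(pos.shape)     # (1024, 34)

# The encodings are concatenated to the pixel values before attention.
pixels = np.zeros((h * w, 3))
inputs = np.concatenate([pixels, pos], axis=-1)
print(inputs.shape)  # (1024, 37)
```

The position information travels with each element as extra channels, instead of being implied by where the element sits in a grid.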

3. Perceiver Results

In the paper, results from three datasets and three tasks are considered:

- ILSVRC 2012 split of the ImageNet dataset for single image classification

- AudioSet dataset for audio event classification in video

- ModelNet40 dataset for object classification. It is an unusual dataset composed of point clouds derived from 3D triangular meshes spanning 40 object categories. The task is to predict the class of each object, given the coordinates of ∼2000 points in 3D space.

3.1 Perceiver Image Classification Results

The results on image classification are stunning. They show that the Perceiver is competitive with ResNet-50 on the ImageNet dataset (the paper describes its performance as comparable to ResNet-50 and ViT) without relying on domain-specific assumptions. This.is.wild. Even more fascinating are the results on the permuted ImageNet dataset. In this dataset, the pixels of every image are shuffled with a fixed permutation that is applied after the position features are created. This is done to show that convolution-based networks, which depend on the grid structure, are not able to utilize learned features in the way that the Perceiver is. And the result is mind-blowing.
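
The toy example below illustrates why the permutation hurts grid-based models but not attention. It uses a tiny 8×8 gradient image, a stand-in "local" statistic (contrast between horizontal neighbours) to represent what a convolution sees, and a single-query attention pooling to represent what attention sees. All names and sizes here are illustrative assumptions, not the setup from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
h, w = 8, 8

# A smooth toy "image": brightness increases from left to right.
ys, xs = np.meshgrid(np.linspace(-1, 1, h), np.linspace(-1, 1, w), indexing="ij")
pixels = ((xs + 1) / 2).reshape(-1, 1)                   # (64, 1)
positions = np.stack([ys, xs], axis=-1).reshape(-1, 2)   # (64, 2)

# Position features are attached BEFORE the pixels are permuted.
inputs = np.concatenate([pixels, positions], axis=-1)    # (64, 3)
perm = rng.permutation(h * w)   # one fixed permutation, shared by all images
permuted = inputs[perm]

def neighbour_contrast(flat_pixels):
    """Grid-local statistic: mean absolute difference between horizontal neighbours."""
    grid = flat_pixels.reshape(h, w)
    return np.abs(np.diff(grid, axis=1)).mean()

def attention_pool(x, query):
    """Single-query attention over a set of elements; ignores their order."""
    scores = x @ query
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    return weights @ x

query = rng.normal(size=3)
print(neighbour_contrast(inputs[:, 0]), neighbour_contrast(permuted[:, 0]))
print(np.allclose(attention_pool(inputs, query), attention_pool(permuted, query)))
```

The neighbour statistic changes once the grid is scrambled, while the attention output stays the same, because every pixel still carries its own position features.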

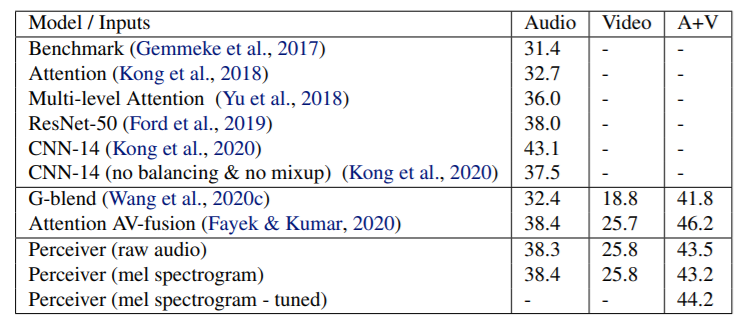

3.2 Perceiver Audio+Video Results

The Perceiver obtains near-state-of-the-art results on both video and audio experiments. On audio tasks, the Perceiver performs better than most ConvNet models, except for CNN-14 with additional improvements. Most of the mentioned approaches use spectrograms, but the Perceiver uses just raw audio. Again, that is beyond fascinating. Video results are similar; however, the Perceiver performs worse than the state-of-the-art approaches that use separate models with late fusion for audio+video tasks.

3.3 Perceiver Point-Cloud Results

The Perceiver performed better than ResNet and variations of the Vision Transformer. However, it didn’t perform better than PointNet++, a specialized neural network that relies on additional transformations that were not considered by the authors.

Conclusion

The Perceiver is one giant step forward. Although there is much more to be done here, the achievements and the results are astonishing. The interesting point is definitely that the Perceiver provides empirical evidence that, if we are able to stack enough Transformer layers together, networks can learn from many kinds of data without modality-specific architectures. It seems that the future really belongs to those who pay more attention.

Thank you for reading!

Nikola M. Zivkovic

Nikola M. Zivkovic is the author of the books Ultimate Guide to Machine Learning and Deep Learning for Programmers. He loves knowledge sharing, and he is an experienced speaker. You can find him speaking at meetups, conferences, and as a guest lecturer at the University of Novi Sad.
