Well, this year was weird and full of challenges for all of us. The pandemic reshaped our world, and it seems that this trend is going to continue in 2021. The field of AI, however, was not impacted that much by all these changes, at least not in a negative way. The steady flow of research papers did not stop; in fact, we saw some quite amazing breakthroughs, with GPT-3 being the most notable. In this article, we present 15 research papers from this year that we think you should read.

In general, we try to present papers that are going to leave a big impact on the future of machine learning and deep learning. We believe that these proposals are going to change the way we do our jobs and push the whole field forward.

Here is the list and overview of machine learning, deep learning and artificial intelligence research papers, which left an impact on us in 2020:

- The Tree Ensemble Layer: Differentiability meets Conditional Computation

- Stanza: A Python Natural Language Processing Toolkit for Many Human Languages

- YOLOv4: Optimal Speed and Accuracy of Object Detection

- ResNeSt: Split-Attention Networks

- Training with Quantization Noise for Extreme Model Compression

- End-to-End Object Detection with Transformers

- Language Models are Few-Shot Learners

- AdaBelief Optimizer: Adapting Stepsizes by the Belief in Observed Gradients

- Unsupervised Translation of Programming Languages

- DeepFaceDrawing: Deep Generation of Face Images from Sketches

- PP-YOLO: An Effective and Efficient Implementation of Object Detector

- Learning to Match Distributions for Domain Adaptation

- PyTorch Metric Learning

- TensorFlow Quantum: A Software Framework for Quantum Machine Learning

- The Microsoft Toolkit of Multi-Task Deep Neural Networks for Natural Language Understanding

The Tree Ensemble Layer: Differentiability meets Conditional Computation

It is an understatement to say that this paper blew us away. It is probably the most elegant solution we read this whole year. The authors of this paper propose a very interesting merger of two very popular and successful machine learning approaches. In a nutshell, they incorporate decision trees within the neural network structure and try to eliminate the flaws of both approaches. If you are a machine learning engineer, chances are that you have used decision tree ensembles once or twice. They give good results in various applications and are considered to be the best out-of-the-box learners. One of their features that we are interested in is conditional computation, which refers to their ability to route each sample through a small number of nodes. This feature is especially important because it enables these models to activate only a fraction of their architecture, which leads to both statistical and computational benefits.

However, like any classical machine learning approach, their performance heavily depends on feature engineering. On the other hand, neural networks earned their good name exactly because of their ability to perform feature engineering on their own. They have a good mechanism for representation learning, but they are harder to tune and they don’t support conditional computation. That is where the idea for combining these two approaches originated. The authors propose the Tree Ensemble Layer (TEL) for neural networks. This layer is an additive model of differentiable decision trees, and it is trained along with the rest of the network using gradient-based optimization methods like stochastic gradient descent. TEL is accompanied by a new sparse activation function for sample routing and by specialized forward and backward propagation algorithms.

To be precise, this algorithm uses improved soft trees, which are a variant of decision trees that perform soft routing. Standard decision trees perform hard routing – a sample is routed in exactly one direction at every internal node. This approach is bad for optimization. In the soft routing approach, a sample can be routed left and right simultaneously, in different proportions. This makes soft trees differentiable, meaning that we can apply stochastic gradient descent to them, but it doesn’t support conditional computation because they can’t route strictly to the left or strictly to the right. That is why a new activation function is introduced – the smooth-step activation function, which can output exact zeroes and ones, unlike the Sigmoid function which is often used in soft decision trees.
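
To make the difference between the two activations concrete, here is a minimal sketch in plain Python. The cubic polynomial and the gamma parameter follow the smooth-step definition as we recall it from the paper, so treat the exact coefficients as an assumption. The helper leaf_probabilities is a hypothetical name used only for this illustration of a single internal node.

```python
import math


def sigmoid(t: float) -> float:
    """Logistic function: approaches 0 and 1 but never reaches them."""
    return 1.0 / (1.0 + math.exp(-t))


def smooth_step(t: float, gamma: float = 1.0) -> float:
    """Cubic smooth-step: exactly 0 below -gamma/2 and exactly 1 above gamma/2."""
    if t <= -gamma / 2:
        return 0.0
    if t >= gamma / 2:
        return 1.0
    return -2.0 / gamma**3 * t**3 + 3.0 / (2.0 * gamma) * t + 0.5


def leaf_probabilities(score: float, activation) -> tuple[float, float]:
    """Routing weights (left, right) for a single internal node."""
    p_left = activation(score)
    return p_left, 1.0 - p_left


for score in (-3.0, 0.1, 3.0):
    # Sigmoid always sends some weight both ways; smooth-step can shut one side off.
    print(score, leaf_probabilities(score, sigmoid), leaf_probabilities(score, smooth_step))
```

Whenever one side receives exactly zero weight, the whole subtree on that side can be skipped, which is what brings conditional computation back.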

This approach, of course, requires some changes in the forward and back propagation tasks and involvement of conditional computation into it. The algorithm for forward pass looks like this:

While the modified algorithm for backpropagation looks like this:

In experiments on 23 datasets, the authors report speed-ups of over 10x compared to the differentiable trees used in the literature.

Stanza: A Python Natural Language Processing Toolkit for Many Human Languages

Ok, we tried to write an objective review of this paper, i.e. of this toolkit, but we had a problem with it. Namely, everything we wrote sounded like a commercial. However, we are not paid to write anything like that, we are just truly excited about this awesome new toolkit from Stanford – Stanza. Transfer learning seems to be the future of deep learning, even when it comes to NLP. On the market, there are several NLP toolkits that provide various NLP options to engineers, like CoreNLP, Flair and spaCy. However, all these toolkits have some limitations. The majority of them support only a few major languages, they are often under-optimized, and they have problems with input text that has been tokenized with some other tool. This means that these tools are limited when it comes to text that comes from multiple sources and in a number of languages.

That is why the authors present Stanza, an open-source Python natural language processing toolkit supporting a whopping 66 human languages. Stanza has multiple advantages. It has a fully neural pipeline that takes raw text as input and outputs annotations including tokenization, multi-word token expansion, lemmatization, part-of-speech and morphological feature tagging, dependency parsing, and named entity recognition. Apart from that, its design is language agnostic, which gives it the ability to support 66 languages. Finally, its performance is fantastic. On top of that, Stanza provides a Python API for the Java Stanford CoreNLP, as well as pre-trained models.

In essence, Stanza is composed of two main layers:

- Neural multilingual NLP pipeline

- Python interface to Java CoreNLP

The pipeline is composed of multiple models whose purpose varies from tokenization to syntactic analysis. Every one of these components is designed with modularity and the processing of many human languages in mind. Stanford’s Java CoreNLP is a really good tool with multiple options, especially when it comes to the English language. However, thus far it was hard to utilize this tool within Python. The second big module of Stanza is exactly the API to CoreNLP. Stanza is easily installed with pip install stanza. Keep in mind that you should install PyTorch beforehand. Here is how Stanza is used on this Marcus Aurelius quote:

import stanza

stanza.download('en')

nlp = stanza.Pipeline('en', use_gpu=False) # Default neural pipeline in English on CPU

doc = nlp("When you arise in the morning, think of what a precious privilege it is to be alive - to breathe, to think, to enjoy, to love.")

doc.sentences[0].print_dependencies()

The last line of the code gives output that looks like this:

('When', '3', 'mark')

('you', '3', 'nsubj')

('arise', '8', 'advcl')

('in', '6', 'case')

('the', '6', 'det')

('morning', '3', 'obl')

(',', '8', 'punct')

('think', '0', 'root')

('of', '10', 'case')

('what', '8', 'obl')

('a', '13', 'det')

('precious', '13', 'amod')

('privilege', '10', 'nsubj')

('it', '15', 'nsubj')

('is', '10', 'acl:relcl')

('to', '18', 'mark')

('be', '18', 'cop')

('alive', '15', 'xcomp')

('-', '8', 'punct')

('to', '21', 'mark')

('breathe', '8', 'advcl')

(',', '21', 'punct')

('to', '24', 'mark')

('think', '21', 'conj')

(',', '24', 'punct')

('to', '27', 'mark')

('enjoy', '21', 'conj')

(',', '27', 'punct')

('to', '30', 'mark')

('love', '27', 'xcomp')

('.', '8', 'punct')

The cool thing is that you can add various processors to the pipeline and build whatever you need. Each processor supports a single operation, and in the previous example we used them all. Here is the list of processors:

- tokenize – Tokenizes the text and performs sentence segmentation.

- mwt – Expands multi-word tokens (MWTs) into multiple words when they are predicted by the tokenizer.

- pos – Labels tokens with their universal POS (UPOS) tags, treebank-specific POS (XPOS) tags, and universal morphological features (UFeats).

- lemma – Generates the word lemmas for all words in the Document.

- depparse – Provides an accurate syntactic dependency parsing analysis.

- ner – Recognizes named entities for all token spans in the corpus.

So, technically we could do something like this:

import stanza

stanza.download('en')

nlp = stanza.Pipeline('en', processors='tokenize,mwt,pos', use_gpu=False)

doc = nlp("When you arise in the morning, think of what a precious privilege it is to be alive - to breathe, to think, to enjoy, to love.")

# print_dependencies() needs the depparse processor, so print the POS tags instead

print(*[f'{word.text}\t{word.upos}' for word in doc.sentences[0].words], sep='\n')

If you want to try out Stanza, there is even an interactive web demo that runs the pipeline here.

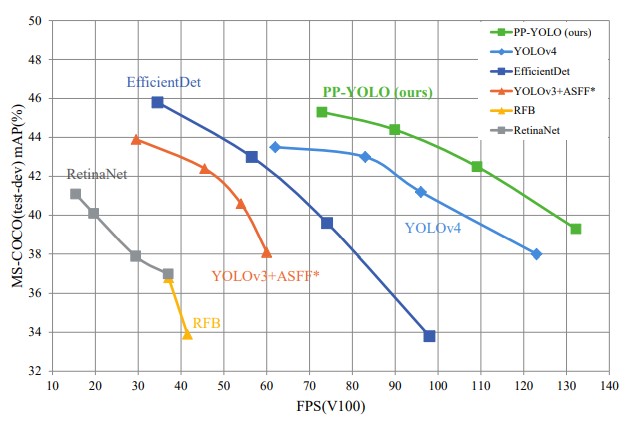

YOLOv4: Optimal Speed and Accuracy of Object Detection

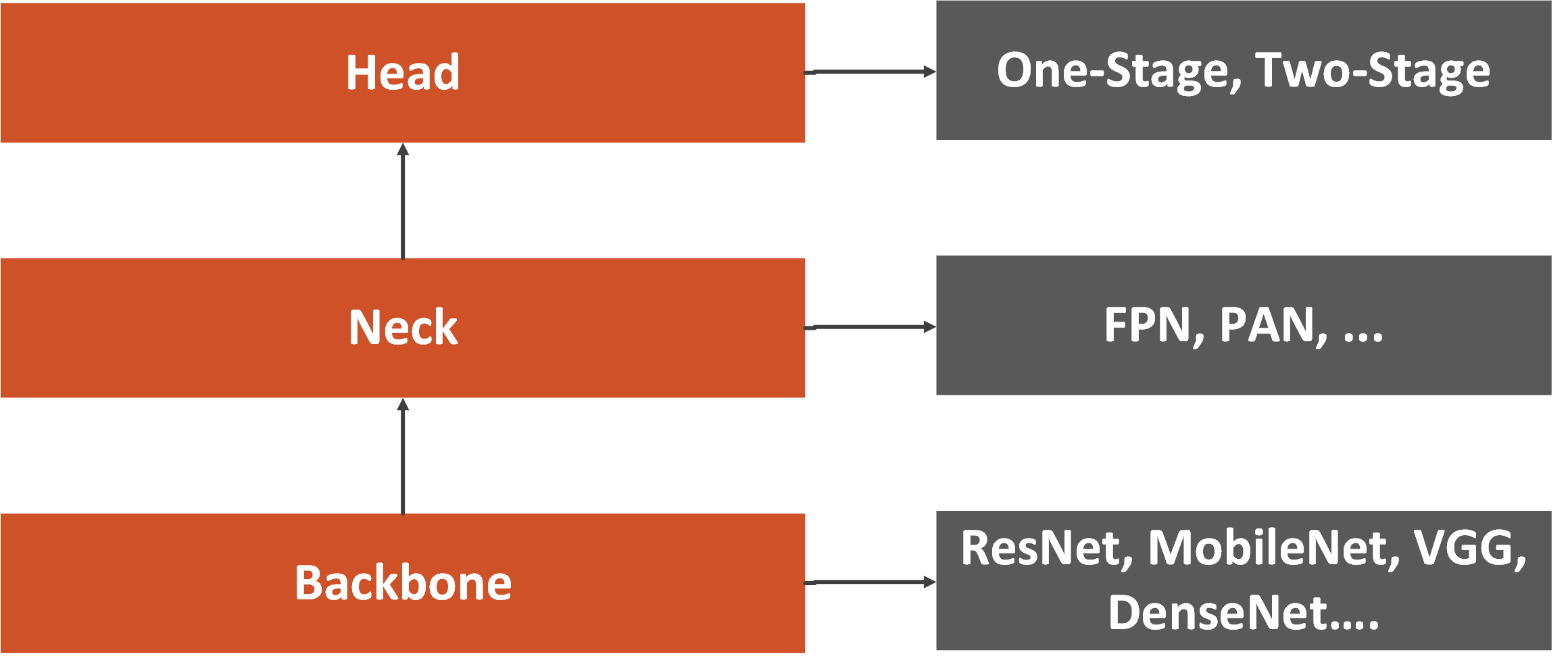

Every time there is a new version of YOLO, there is a small celebration among engineers that work on computer vision problems. YOLO, or You Only Look Once, is a real-time object detection neural network. It is quite neat and quick. This new improved version of YOLO addresses problems of Convolutional Neural Networks that operate in real-time on a conventional GPU. The paper focuses on developing an efficient, powerful, and high-accuracy object-detection model that can be quickly trained on a standard GPU. Keep in mind that this year we had a chance to see YOLOv5 too, but not officially and not in the form of a research paper. Essentially, an object-detection neural network is usually composed of three parts. The authors named them backbone, neck and head. The backbone is usually a deep architecture pre-trained on the ImageNet dataset and used without its top layers, just as we do for transfer learning.

This can be any of the “large networks” like ResNet, DenseNet, a variation of VGG, or MobileNet if you plan to run your object-detection model on a CPU. The neck is usually composed of several layers whose goal is to collect feature maps from different stages. This may be a Feature Pyramid Network or a Path Aggregation Network. Finally, the head is the part of the object detection model that is used for predicting classes and drawing bounding boxes around objects. Based on the type of the head, we distinguish two types of object-detection models:

- One-stage object-detection models – Detect class of the object directly from the image

- Two-stage object-detection models – First detect regions of interest (region proposals), and then classify these regions.

The authors introduce two terms: Bag of Freebies (BoF) and Bag of Specials (BoS). Bag of Freebies refers to methods that only change the training strategy or increase the training cost, without affecting the inference cost. One such method is data augmentation, which is used to increase the variability of the input images and make the model more robust. Other methods that could be considered part of the Bag of Freebies are random erase, CutOut, grid mask, DropOut, DropConnect, etc. All these methods tamper with the input images and/or feature maps and remove bias from the input data. Finally, the Bag of Freebies also covers objective functions, like the one used for Bounding Box (BBox) regression. Bag of Specials covers plugin modules and post-processing methods that do increase the inference cost, but improve the accuracy of object detection as well. These can be any methods enhancing certain features of a model, for example enlarging the receptive field, introducing an attention mechanism, or strengthening feature integration capability.
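
As an illustration of how cheap a typical "freebie" is, here is a minimal CutOut-style sketch in plain Python. It is a simplification under our own assumptions: the image is a plain list of rows, the square always stays inside the image, and the function name and parameters are hypothetical rather than taken from the YOLOv4 code base.

```python
import random


def cutout(image, size, rng=random):
    """Return a copy of a 2-D image (list of rows) with one size x size square zeroed."""
    height, width = len(image), len(image[0])
    top = rng.randrange(0, height - size + 1)
    left = rng.randrange(0, width - size + 1)
    out = [row[:] for row in image]          # leave the original image untouched
    for r in range(top, top + size):
        for c in range(left, left + size):
            out[r][c] = 0
    return out


image = [[1] * 6 for _ in range(6)]
augmented = cutout(image, size=2, rng=random.Random(0))
for row in augmented:
    print(row)
```

The augmentation only runs while training batches are prepared, so it adds nothing to the cost of inference.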

Based on all of these, the architecture of YOLOv4 consists of the following parts:

• Backbone: CSPDarknet53 – Cross Stage Partial Network minimizing required heavy inference computations from the network architecture perspective.

• Neck: Spatial Pyramid Pooling – SPP (so object-detector can receive images of arbitrary size/scale) and Path Aggregation Network – PAN (boosting information flow in proposal-based instance segmentation framework)

• Head: YOLOv3

• Bag of Freebies (BoF) for backbone: CutMix and Mosaic data augmentation, DropBlock regularization, Class label smoothing

• Bag of Specials (BoS) for backbone: Mish activation, Cross-stage partial connections (CSP), Multi input weighted residual connections (MiWRC)

• Bag of Freebies (BoF) for detector: CIoU-loss, CmBN, DropBlock regularization, Mosaic data augmentation, Self-Adversarial Training, Eliminate grid sensitivity, Using multiple anchors for single ground truth, Cosine annealing scheduler, Optimal hyperparameters, Random training shapes

• Bag of Specials (BoS) for detector: Mish activation, SPP-block, SAM-block, PAN path-aggregation block, DIoU-NMS
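
Two items from the list above, CIoU-loss and DIoU-NMS, build on the Distance-IoU measure: plain IoU minus a penalty for the distance between box centers. Below is a minimal sketch of IoU, DIoU and a greedy DIoU-based NMS in plain Python. Boxes are assumed to be (x1, y1, x2, y2) tuples, and the function names and the 0.5 threshold are our own choices for illustration, not the settings used in the paper.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def diou(a, b):
    """IoU minus squared center distance over squared enclosing-box diagonal."""
    center_dist2 = ((a[0] + a[2]) / 2 - (b[0] + b[2]) / 2) ** 2 \
                 + ((a[1] + a[3]) / 2 - (b[1] + b[3]) / 2) ** 2
    diag2 = (max(a[2], b[2]) - min(a[0], b[0])) ** 2 \
          + (max(a[3], b[3]) - min(a[1], b[1])) ** 2
    return iou(a, b) - center_dist2 / diag2


def diou_nms(boxes, scores, threshold=0.5):
    """Greedy NMS that suppresses boxes whose DIoU with a kept box reaches the threshold."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if diou(boxes[best], boxes[i]) < threshold]
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(diou_nms(boxes, scores))
```

Because of the center-distance penalty, two boxes with the same overlap are less likely to suppress each other when their centers are far apart.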

The result is a state-of-the-art object detector. According to the authors, the YOLOv4 results are located on the Pareto optimality curve and are superior to the fastest and most accurate detectors in terms of both speed and accuracy, as you can see in the image above.

ResNeSt: Split-Attention Networks

Ok, this is another computer vision paper that caught our undivided attention (ba dam tus!). We already mentioned that image classification and object detection solutions use some deep convolutional networks (CNN) as a backbone and then build on top of that. This is done because learning low-level features, like curves and lines, is already done (in a better way) and we don’t need to do it again. One of the popular base architectures that are used is ResNet or some variation of it. They are simple, modular, and provide really good results. However, in order to improve the accuracy of a ResNet backbone architecture, we often need to do some kind of modifications within architecture itself.

Another way to improve the accuracy of image classification models is large-scale neural architecture search – NAS. However, even though these NAS-derived models have high accuracy, their usage is limited. Essentially, they are not optimized for training efficiency, memory usage and processing on commercial hardware. The authors of this paper build on top of the ResNet architecture and add Split-Attention blocks to it. As a result, a novel architecture called ResNeSt is created. The goal was to create a CNN backbone architecture for transfer learning that is easy to train and more robust. In a nutshell, feature-map split-attention blocks are used instead of classical residual blocks. These attention blocks divide the feature-map into several groups and finer-grained subgroups. The feature representation of each group is then determined via a weighted combination of the representations of its splits. Here is what the proposed Split-Attention block looks like:

Every feature-map can be divided into several groups, driven by the hyperparameter K. This hyperparameter defines the number of feature-map groups, which are also called cardinal groups. Another important hyperparameter is R, which defines the number of splits within a cardinal group itself. This means that the total number of feature groups is G = KR. On each individual group, a number of transformations may be applied, and the combined representation of a cardinal group is calculated by applying element-wise summation across its splits. Then global average pooling is applied, and the pooled statistics are used to weight the splits. Finally, the cardinal group representations are concatenated along the channel dimension.
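
The combination of splits inside one cardinal group can be sketched in plain Python as follows. This is a heavily simplified illustration under our own assumptions: the learned dense layers that produce the attention logits are replaced by hypothetical per-split scaling factors (gate_weights), and feature maps are nested lists rather than tensors.

```python
import math


def softmax(values):
    """Numerically stable softmax over a short list of numbers."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def split_attention(splits, gate_weights):
    """Combine the R splits of one cardinal group.

    splits: R feature maps, each a list of C channels, each channel a flat list of H*W values.
    gate_weights: hypothetical R x C factors standing in for the learned dense layers.
    """
    radix, channels = len(splits), len(splits[0])
    positions = len(splits[0][0])
    output = []
    for c in range(channels):
        # Element-wise sum across splits, then global average pooling.
        summed = [sum(splits[r][c][i] for r in range(radix)) for i in range(positions)]
        pooled = sum(summed) / positions
        # Softmax across the R splits gives this channel's attention weights.
        attention = softmax([gate_weights[r][c] * pooled for r in range(radix)])
        # Weighted combination of the splits.
        output.append([
            sum(attention[r] * splits[r][c][i] for r in range(radix))
            for i in range(positions)
        ])
    return output


splits = [
    [[1.0, 1.0], [2.0, 2.0]],   # split 1: two channels, two spatial positions
    [[3.0, 3.0], [0.0, 0.0]],   # split 2
]
equal_gates = [[1.0, 1.0], [1.0, 1.0]]
print(split_attention(splits, equal_gates))
```

With equal gates the block reduces to a plain average of the splits; learned gates let each channel favour the split that carries more useful information.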

Apart from the Split-Attention block, additional changes to the ResNet architecture are also applied. Instead of using strided 1×1 convolutional blocks, the authors used 3 × 3 average pooling layers. This way spatial information is preserved. Apart from that, the first 7 × 7 convolutional layer is replaced with three 3 × 3 convolutional layers, based on the propositions from this paper. Finally, a 2 × 2 average pooling layer is added to the shortcut connection prior to the 1 × 1 convolutional layer. The resulting novel architecture boosts performance across image classification, object detection, instance segmentation and semantic segmentation.

Training with Quantization Noise for Extreme Model Compression

Today’s neural network architectures can have a huge memory footprint. For example, the ResNet50 architecture that we mentioned earlier has over 23 million trainable parameters. This makes their usage in embedded systems somewhat limited. There are several techniques that can help reduce the size of the network. Pruning and distillation techniques reduce the number of network weights, while other model compression techniques reduce the memory footprint of the weights that remain. One of the interesting techniques, and the one used in this paper, is quantization. The aim of this technique is to reduce the number of bits per weight, which means that one can further compress the model even if it is already optimized from the architecture point of view.

These methods essentially compress the weights matrix W by assigning to each block bkl an index that points to a codeword c in a codebook C. Then they store the codebook C and the resulting indices instead of the real weights. Finally, during the inference, they reconstruct an approximation of the original matrix W using stored information. However, quantization techniques used thus far made approximations that induced a significant drop in performance. That is why the authors proposed quantization during the training process using quantization noise. They rely on already proven techniques like Quantization Aware Training and their approach can work with the various quantization methods. Another cool thing about it is that it can be applied to trained networks as a post-processing step.
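
The codebook idea can be sketched in a few lines of plain Python. The codebook values, the block contents and the function names here are hypothetical; in practice the codebook is learned, for example with k-means.

```python
def nearest_codeword(block, codebook):
    """Index of the codeword with the smallest squared distance to the block."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((b - c) ** 2 for b, c in zip(block, codebook[i])),
    )


def quantize(blocks, codebook):
    """Store one small integer per block instead of the block's weights."""
    return [nearest_codeword(block, codebook) for block in blocks]


def reconstruct(indices, codebook):
    """Approximate the original blocks from the stored indices at inference time."""
    return [list(codebook[i]) for i in indices]


codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]]   # hypothetical, normally learned
blocks = [[0.9, 1.2], [0.1, -0.1], [-0.8, 0.7]]    # blocks of the weight matrix
indices = quantize(blocks, codebook)
print(indices)
print(reconstruct(indices, codebook))
```

Only the codebook and the indices need to be stored, and the gap between the blocks and their reconstruction is the approximation error that hurts accuracy.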

At the core of the proposed solution lies the Quant-Noise technique, which is applied only to a random subset of weights during training. Using this technique in combination with a Product Quantizer gives us the best trade-off between accuracy and model size. Product Quantization is a method that works on groups of weights. It splits each column of the weights matrix W into m subvectors and learns the same codebook for each of the resulting subvectors.

So, the whole thing works something like this:

– Pick the quantization method that is used.

– Calculate blocks bkl related to the quantization method.

– During each forward pass randomly select a subset of these blocks.

– Apply some noise to them.

– During the backward pass compute gradients for all the weights, using a straight-through estimator (STE) for the distorted weights.
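
The forward-pass part of the steps above can be sketched as follows. This is a minimal illustration under our own assumptions: a coarse uniform quantizer stands in for whichever quantization method is chosen, the weights are a flat list, and all names are hypothetical. The backward pass is not shown, since it needs an autograd framework; there, the straight-through estimator would treat the quantizer as the identity function.

```python
import random


def uniform_quantize(block, levels=4):
    """Stand-in quantizer: snap each weight to one of `levels` evenly spaced values."""
    lo, hi = min(block), max(block)
    if hi == lo:
        return list(block)
    scale = (hi - lo) / (levels - 1)
    return [lo + round((w - lo) / scale) * scale for w in block]


def quant_noise_forward(weights, block_size, rate, rng):
    """Weights used in one forward pass: a random subset of blocks is quantized."""
    noisy = []
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]
        if rng.random() < rate:
            block = uniform_quantize(block)   # distorted block; gradients would use the STE
        noisy.extend(block)
    return noisy


weights = [0.12, -0.40, 0.33, 0.90, -0.75, 0.05, 0.61, -0.20]
rng = random.Random(0)
print(quant_noise_forward(weights, block_size=4, rate=0.5, rng=rng))
```

With rate set to 1.0 every block is quantized in every pass, which corresponds to ordinary quantization-aware training; lower rates leave part of the weights undistorted in each pass.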

In the paper, you can see how noise is applied to different quantization techniques. The results are really interesting, proving that this approach provides high compression rates at a reasonable cost in accuracy.

End-to-End Object Detection with Transformers

We had to put this paper on this list because its simplicity and its results are just awesome. As its title suggests, the authors of this paper used Transformers to simplify object detection pipelines. In essence, they aimed to address the problems of modern object detectors by removing the preprocessing and postprocessing tasks that influence their performance. End-to-end techniques have already proved themselves in machine translation and speech recognition tasks, but thus far they were not used for image recognition, even though there were indications that this is where the field is heading. The proposed solution, called DETR for DEtection TRansformer, utilizes the encoder-decoder structure of the Transformer as well as its self-attention mechanisms to predict all objects in the image at once. Its architecture is really simple and is composed of three main parts: Backbone CNN, Transformer and Feed Forward Neural Network. Full architecture looks something like this:

For the Backbone CNN, the authors used standard implementation of ResNet. Once it creates feature maps, the Transformer encoder uses 1×1 convolution to further reduce channel dimension and creates new smaller feature maps. These maps are then compressed to one dimension since encoder expects one-dimensional input. Encoder itself is a standard Transformer encoder that is composed of a self-attention module and feed-forward neural network. The same is with the Transformer decoder. It uses standard multi-headed self-attention mechanisms to transform embeddings. The only difference is that this approach decodes all objects at once and not one by one. In the end, the 3-layer feed-forward neural network is used for predictions. This design of DETR can easily be extended to more complex tasks.

As the authors suggested the amazing thing is that this architecture can be implemented within 50 lines of code with PyTorch. Here is how:

from PIL import Image

import requests

import matplotlib.pyplot as plt

%config InlineBackend.figure_format = 'retina'

import torch

from torch import nn

from torchvision.models import resnet50

import torchvision.transforms as T

torch.set_grad_enabled(False);

class DETRdemo(nn.Module):

    def __init__(self, num_classes, hidden_dim=256, nheads=8,

                 num_encoder_layers=6, num_decoder_layers=6):

        super().__init__()

        # create ResNet-50 backbone

        self.backbone = resnet50()

        del self.backbone.fc

        # create conversion layer

        self.conv = nn.Conv2d(2048, hidden_dim, 1)

        # create a default PyTorch transformer

        self.transformer = nn.Transformer(

            hidden_dim, nheads, num_encoder_layers, num_decoder_layers)

        # prediction heads, one extra class for predicting non-empty slots

        # note that in baseline DETR linear_bbox layer is 3-layer MLP

        self.linear_class = nn.Linear(hidden_dim, num_classes + 1)

        self.linear_bbox = nn.Linear(hidden_dim, 4)

        # output positional encodings (object queries)

        self.query_pos = nn.Parameter(torch.rand(100, hidden_dim))

        # spatial positional encodings

        # note that in baseline DETR we use sine positional encodings

        self.row_embed = nn.Parameter(torch.rand(50, hidden_dim // 2))

        self.col_embed = nn.Parameter(torch.rand(50, hidden_dim // 2))

    def forward(self, inputs):

        # propagate inputs through ResNet-50 up to avg-pool layer

        x = self.backbone.conv1(inputs)

        x = self.backbone.bn1(x)

        x = self.backbone.relu(x)

        x = self.backbone.maxpool(x)

        x = self.backbone.layer1(x)

        x = self.backbone.layer2(x)

        x = self.backbone.layer3(x)

        x = self.backbone.layer4(x)

        # convert from 2048 to 256 feature planes for the transformer

        h = self.conv(x)

        # construct positional encodings

        H, W = h.shape[-2:]

        pos = torch.cat([

            self.col_embed[:W].unsqueeze(0).repeat(H, 1, 1),

            self.row_embed[:H].unsqueeze(1).repeat(1, W, 1),

        ], dim=-1).flatten(0, 1).unsqueeze(1)

        # propagate through the transformer

        h = self.transformer(pos + 0.1 * h.flatten(2).permute(2, 0, 1),

                             self.query_pos.unsqueeze(1)).transpose(0, 1)

        # finally project transformer outputs to class labels and bounding boxes

        return {'pred_logits': self.linear_class(h),

                'pred_boxes': self.linear_bbox(h).sigmoid()}

The results on the COCO 2017 detection and panoptic segmentation datasets are in line with Faster R-CNN. However, this approach does not perform as well on smaller objects as Faster R-CNN does. Even though there is a lot of room for improvement, this novel idea is going to change the game. We cannot wait to see what other interesting concepts will spring from it.

Language Models are Few-Shot Learners

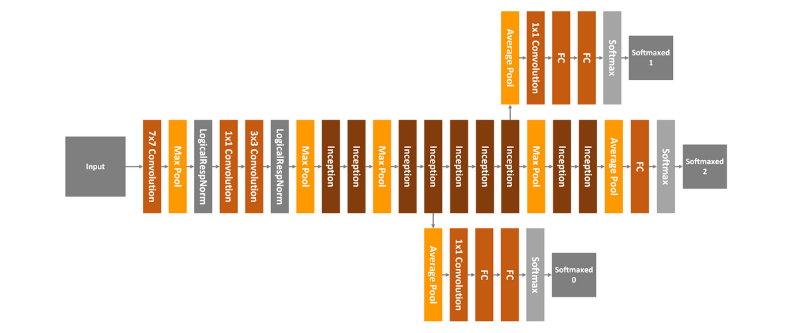

The background of this fascinating paper, released by researchers from OpenAI, lies in the fact that transfer learning is becoming dominant in NLP. This means that the industry heavily uses models that are pre-trained on a large corpus of text and then fine-tuned on a specific task. Fine-tuning itself can be time-consuming. On the other hand, humans can perform a new language task from only a few examples, which is something that NLP models are trying to achieve (even though they are still far away from it). In order to improve on that and produce a more task-agnostic solution, OpenAI trained the GPT-3 model with 175 billion parameters and tested its performance without any fine-tuning. As expected, they achieved some amazing results. Just for comparison, last year’s GPT-2 had 1.5 billion parameters, and earlier this year Microsoft introduced what was until then the largest Transformer-based language model, with 17 billion parameters. So, yes, GPT-3 is a huge autoregressive model, trained with unsupervised learning and evaluated in zero-shot, one-shot and few-shot settings.

Architecturally speaking, there are almost no changes from the GPT-2 model. All the nitty-gritty details like modified initialization, pre-normalization and reversible tokenization are the same. The only difference is that this time the authors used alternating dense and locally banded sparse attention patterns in the layers of the transformer. Also, this large GPT-3 model was not the only model trained for the purposes of this paper. There are 8 models, with sizes ranging from 125 million to 175 billion parameters:

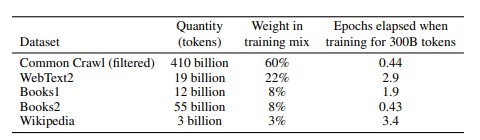

In this table, we can also see the sizes of the batches used for model training. These models are trained on following datasets:

The results from all the categories are mindblowing. For example, for traditional language modeling tasks, GPT-3 sets a new SOTA on the Penn Tree Bank dataset by a margin of 15 points based on zero-shot perplexity. GPT-3 showed amazing results in question answering tests. In general, these tests are separated into open-book and closed-book tests. Due to the number of possible queries, open-book tests use an information retrieval system to find relevant text and then the model learns to generate the answer from the question and retrieved text. Closed-book tests don’t have this retrieval system.

GPT-3 achieved 64.3% in the zero-shot setting, 68.0% in the one-shot setting, and 71.2% in the few-shot setting on closed-book tests on the TriviaQA dataset. It outperformed the fine-tuned T5-11B by 14.2% in the zero-shot setting. Note that T5-11B is fine-tuned, while GPT-3 is not. It is interesting that on translation tasks, GPT-3 also sets a new SOTA when it comes to translation into English. It outperforms previous unsupervised NMT work by 5 BLEU. For the other tasks, like Winograd-Style Tasks, Common Sense Reasoning and Reading Comprehension, GPT-3 also proved its superiority. Read more about it in the paper.
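
The zero-shot, one-shot and few-shot settings differ only in how many solved examples are placed in the prompt; the model weights are never updated. Here is a minimal sketch of how such prompts could be assembled. The Q:/A: template, the example questions and the function name are our own assumptions, not the exact format used in the paper.

```python
def build_prompt(task_description, demonstrations, query):
    """Assemble a prompt from a task description, K solved examples and one open query."""
    parts = [task_description]
    for question, answer in demonstrations:
        parts.append(f"Q: {question}\nA: {answer}")
    parts.append(f"Q: {query}\nA:")          # the model is expected to continue from here
    return "\n\n".join(parts)


examples = [
    ("What is the capital of France?", "Paris"),
    ("Who wrote Hamlet?", "William Shakespeare"),
]
task = "Answer the question."
query = "What is the largest planet in the Solar System?"

zero_shot = build_prompt(task, [], query)
one_shot = build_prompt(task, examples[:1], query)
few_shot = build_prompt(task, examples, query)
print(few_shot)
```

The same frozen model receives all three prompts, so any gain from the extra examples comes purely from conditioning on the context.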

Since GPT-3 was focused on task-agnostic performance, it was not fine-tuned. This means that there is a lot more room for improvement and that we will see some results in that field rather soon.

Read the complete paper here.

AdaBelief Optimizer: Adapting Stepsizes by the Belief in Observed Gradients

One of the most important components, when it comes to building deep learning and machine learning systems, is an optimizer. Optimizers are generally categorized as adaptive methods (like Adam) and accelerated schemes (like Nesterov accelerated gradient or Stochastic Gradient Descent with momentum). When choosing an optimizer for our solution, we need to consider some trade-offs. For example, Adam works best for GANs, but it generalizes worse compared to SGD for Convolutional Neural Networks. That is why the authors of this paper propose the AdaBelief optimizer, which aims to solve three optimization problems: fast convergence, good generalization, and training stability.

This optimizer is a modified Adam. Let us denote the observed gradient at step t as gt and its exponential moving average (EMA) as mt. Also, let us denote the EMA of gt² and (gt − mt)² as vt and st, respectively. In Adam, mt is divided by √vt, while in AdaBelief it is divided by √st. Intuitively, 1/√st is the “belief” in the observation. If we view mt as the prediction of the gradient and gt deviates much from mt, we have a weak belief in gt and take a small step. On the other hand, if gt is close to the prediction mt, we have a strong belief in gt and take a large step.

As you can see, the idea is to adapt the step size based on the difference between the predicted and the observed gradient. If the observed gradient deviates from the prediction, the step will be small. Similarly, when the observed gradient is close to the prediction, the step will be large. The experiments confirm that the authors were successful in their intention and that this optimizer indeed achieves three goals simultaneously: fast convergence as in adaptive methods, good generalization as in SGD, and training stability in complex settings such as GANs. Furthermore, AdaBelief has the same parameters as Adam, so it is easy to tune.
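
To see the difference in code, here is a minimal scalar sketch of a single update step for both optimizers. It follows the description above plus the usual Adam bias correction; the learning rate and the dictionary-based state are our own choices for illustration, and the small eps added inside the EMA of st is how we recall the paper's formulation, so treat it as an assumption.

```python
import math


def adam_step(theta, grad, state, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update: the step is scaled by the EMA of grad**2."""
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad ** 2
    m_hat = state["m"] / (1 - beta1 ** state["t"])
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    return theta - lr * m_hat / (math.sqrt(v_hat) + eps)


def adabelief_step(theta, grad, state, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """One AdaBelief update: the step is scaled by the EMA of (grad - m)**2."""
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["s"] = beta2 * state["s"] + (1 - beta2) * (grad - state["m"]) ** 2 + eps
    m_hat = state["m"] / (1 - beta1 ** state["t"])
    s_hat = state["s"] / (1 - beta2 ** state["t"])
    return theta - lr * m_hat / (math.sqrt(s_hat) + eps)


# A perfectly predictable gradient: the observation always matches the prediction.
theta_adam, theta_belief = 0.0, 0.0
adam_state = {"t": 0, "m": 0.0, "v": 0.0}
belief_state = {"t": 0, "m": 0.0, "s": 0.0}
for _ in range(50):
    theta_adam = adam_step(theta_adam, 1.0, adam_state)
    theta_belief = adabelief_step(theta_belief, 1.0, belief_state)
print(theta_adam, theta_belief)
```

With a constant gradient, Adam keeps taking steps of roughly the learning rate, while AdaBelief's denominator shrinks as the prediction improves, so it moves further in the same number of steps.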

Unsupervised Translation of Programming Languages

If you work in the software development industry, sooner or later you will face projects where you need to transfer part of the functionality from one programming language to another. Sometimes whole projects are translated from one programming language to another. These are expensive endeavors. There is a famous example of how the Commonwealth Bank of Australia spent around $750 million and 5 years of work to convert its platform from COBOL to Java. Basically, translating functionality from one language to another is not easy. For big projects, you need to be experienced in both languages. Of course, there are a number of tools that can help you with this; in fact, some of these tools are integrated as a part of some programming languages.

For example, TypeScript uses such a tool to convert its code into JavaScript. This way you can use an object-oriented approach and type checking, and still run the built software in the majority of browsers. These tools are called transcompilers, transpilers, or source-to-source compilers. Their purpose is to convert code from one programming language to another, given that the languages work on the same level of abstraction. The authors of this paper use unsupervised learning to do so. Note that they focused on the use case of translating an existing codebase written in an obsolete or deprecated language to a newer one.

In theory, translating one Turing-complete language into another should always be possible; however, in practice it is not so easy because of different syntaxes and rules. This becomes especially challenging if we want to translate a dynamically typed language into a statically typed one or vice-versa. That is why most of the transcompilation tools available at the moment are rule-based, meaning they tokenize the input source code and convert it into an Abstract Syntax Tree (AST). After that, they utilize handcrafted rewrite rules. Creating such tools is again lengthy and expensive, because of the many rules that have to be provided. That is why the authors propose TransCoder, an unsupervised learning transcompiler that is able to translate code among three languages: Python, C++ and Java.
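
To give a feeling for what a rule-based approach involves, here is a toy sketch using Python's standard ast module (ast.unparse needs Python 3.9 or newer). It applies a single handcrafted rewrite rule, renaming the Python 2 style xrange to range; the class name and the rule are our own illustration, and a real transcompiler between different languages would need a very large set of such rules.

```python
import ast


class XrangeToRange(ast.NodeTransformer):
    """One handcrafted rewrite rule: rename every use of `xrange` to `range`."""

    def visit_Name(self, node):
        if node.id == "xrange":
            return ast.copy_location(ast.Name(id="range", ctx=node.ctx), node)
        return node


source = "for i in xrange(3):\n    total += i\n"
tree = ast.parse(source)              # tokenize and parse into an AST
tree = XrangeToRange().visit(tree)    # apply the rewrite rule to every node
ast.fix_missing_locations(tree)
translated = ast.unparse(tree)        # turn the rewritten AST back into source code
print(translated)
```

Every construct that differs between the two languages needs its own rule like this one, which is exactly the cost an unsupervised approach tries to avoid.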

TransCoder is sequence-to-sequence (seq2seq) model with attention. It is composed of an encoder and a decoder with a transformer architecture. The same model is used for all programming languages. In general, it is based on three pillars:

- Cross Programming Language Model pretraining

- Denoising auto-encoding

- Back-translation

Cross Programming Language Model (XLM) pretraining is one of the most important ingredients of this model. This process ensures that programming sequences with similar meaning are represented with similar latent representations. If this sounds familiar, that is because it is! Essentially authors are using well known NLP tricks for programming languages. Brilliant!

In a nutshell, a Cross-lingual Language Model (XLM) is pretrained with a masked language modeling objective on monolingual source code datasets. As a result, pieces of code that express the same instructions are mapped to the same representation, regardless of the programming language. XLM is used to initialize the TransCoder model. However, this on its own is not enough, because the decoder part of the transformer architecture requires additional attention parameters, which are initialized randomly. That is how the first part of unsupervised training (initialization) is done. The second part of this training, i.e. language modeling, is done by training the model to encode and decode sequences with a Denoising Auto-Encoding (DAE) objective. This means that the model is trained to predict a sequence of tokens given a corrupted version of that sequence. Corruption of the sequences is done by randomly masking, removing and shuffling input tokens.
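To make the corruption step more tangible, here is a minimal sketch of such a noise function in plain Python. The probabilities, the `<MASK>` symbol and the local-shuffle window are illustrative assumptions, not values taken from the paper, and the function name `corrupt` is hypothetical.

```python
import random

MASK_TOKEN = "<MASK>"  # hypothetical placeholder symbol


def corrupt(tokens, mask_prob=0.15, drop_prob=0.1, shuffle_window=3, rng=None):
    """Return a noisy copy of `tokens`: some removed, some masked, order locally shuffled."""
    rng = rng or random.Random()
    noisy = []
    for token in tokens:
        roll = rng.random()
        if roll < drop_prob:
            continue                      # remove the token
        if roll < drop_prob + mask_prob:
            noisy.append(MASK_TOKEN)      # hide the token behind a mask
        else:
            noisy.append(token)           # keep the token unchanged
    # Local shuffle: add a random offset to every position and sort by the result,
    # so tokens only move a few places away from where they started.
    keys = [i + rng.uniform(0, shuffle_window) for i in range(len(noisy))]
    return [tok for _, tok in sorted(zip(keys, noisy), key=lambda pair: pair[0])]


source = "def add ( a , b ) : return a + b".split()
noisy = corrupt(source, rng=random.Random(0))
print(noisy)   # corrupted sequence fed to the encoder
print(source)  # clean sequence the decoder should reconstruct
```

During DAE training the model would see `noisy` as input and be asked to output `source`.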

In the end, the authors used back-translation. The previous two steps of the training would be enough for this model, but in order to increase the quality of the generated code the authors added this step. In this process, two models are trained: source-to-target and target-to-source. The purpose of the target-to-source model is to generate noisy translations of the source language from the target language. These generated sequences of noisy code are then used to train the source-to-target model. The two models are trained in parallel until convergence.

The results of TransCoder are awesome. TransCoder successfully understands the syntax specific to each language, learns data structures and their methods, and correctly aligns libraries across programming languages.

Read the complete paper here.

DeepFaceDrawing: Deep Generation of Face Images from Sketches

By now you have probably seen the results of this paper somewhere on the web. It is truly amazing how the solution proposed in it transforms sketches into face images. This is a very attractive field since its applications are many, from character design to criminal investigations. In general, it would be cool to have such drawing assistance at your disposal. Thus far, similar solutions used sketches as hard constraints, which didn’t always give good results. This is why the authors of this paper suggest a solution that utilizes recent advances in image-to-image translation and uses sketches as soft constraints to guide image synthesis. Basically, they form loose points from the sketch and then use deep learning to “fill” in the missing parts.

The solution relies heavily on some recent advances in deep learning, especially from conditional face generation. To be more precise, the authors relied on conditional GAN and pix2pix principles for the image synthesis parts of the architecture. Apart from that, data preparation for this architecture is a bit specific, but it also provides the intended flexibility. The authors couldn’t use datasets with sketches, like the CUHK face sketch database, because these contain shading effects, which the authors wanted to avoid. They built their dataset from the face image data of CelebAMask-HQ, which contains high-resolution facial images with semantic masks of facial attributes, processed with holistically-nested edge detection, APDrawingGAN and Photoshop’s Photocopy filter.

The architecture itself is composed of three modules. The first module is Component Embedding, or CE. This component of the system applies an autoencoder architecture to overlapping windows centered at individual face components and learns five features from the sketch data. To be more specific, it learns “left-eye”, “right-eye”, “nose”, “mouth” and “remainder” features. A separate autoencoder is used for each feature, and each of them is composed of five encoding layers, five decoding layers, and the latent representation (the code, represented with a dense layer). Additional residual blocks are added after every convolution/deconvolution operation for better results. The other two modules, Feature Mapping (FM) and Image Synthesis (IS), are in charge of conditional image generation and are used to map component feature vectors to realistic images.

FM contains five parts, one for each feature that CE detected. These five decoding models convert feature vectors to spatial feature maps. Each of these five models has a fully connected layer followed by five decoding layers. Once the spatial feature maps are created, they are merged back into the “remainder” feature map, putting the whole face back together. Finally, the IS module converts this feature map into a realistic face image. This module is similar to the mentioned conditional GAN, and it takes a feature map as an input to the generator. The generator is composed of an encoding section, a residual block and a decoding unit. It is important to note that this complete architecture is trained in two phases. The first phase trains only CE, while the second is in charge of training FM and IS.

Read the complete paper here.

PP-YOLO: An Effective and Efficient Implementation of Object Detector

YOLOv3 became a sort of industry standard when it comes to object detection due to its excellent effectiveness and efficiency. Many of our clients are using this architecture for this important computer vision task. A couple of months ago, the YOLOv4 paper was published. Unlike previous versions of YOLO, this paper doesn’t make big changes architecturally speaking, but focuses on various strategies such as a bag of freebies and bag of specials on the basis of YOLOv3. Improving the performance of YOLOv3 seems to be the main goal, not its redefinition.

This paper focuses on that as well. In fact, it does not propose a novel approach or architecture, but provides effective guidelines that lead to better accuracy while leaving the inference speed almost unchanged. It provides valuable tips and tricks for developers. The model that the authors propose is called PP-YOLO because it is based on PaddlePaddle. The final result improves the mAP on COCO from 43.5% to 45.2% at a speed faster than YOLOv4, so let’s check to see what these tricks are all about.

The proposals from this paper cannot be applied directly to the network structure of YOLOv3; small modifications are required. So, let’s go through the YOLOv3 structure before we dive into the tips and tricks this paper proposes. YOLOv3 is a one-stage object detector, a family of detectors composed of a backbone network (usually ResNet), a detection neck (usually FPN), and a detection head for object classification and localization.

As a backbone, YOLOv3 uses DarkNet-53. The authors replace the backbone with ResNet50-vd-dcn. This network is essentially ResNet50-vd with the addition of deformable convolutional layers (DCN), i.e. the 3×3 convolution layers in the last stage are replaced with DCNs. Some minor changes are made to the FPN network that is used as a detection neck. As a detection head, YOLOv3 uses a pretty simple convolutional neural network that has one 3×3 convolutional layer followed by a 1×1 convolutional layer. Below you can find the complete architecture along with the injection points of PP-YOLO, i.e. the changes made by this paper.

Ok, let’s go through the list of tips proposed in this article. Note that some of these tricks can be applied to YOLOv3 directly:

- Larger Batch Size – Increase training batch size from 64 to 192, and adjust the training schedule and learning rate accordingly.

- Exponential Moving Average (EMA) – During the training process, maintaining moving averages of the trained parameters can be useful since evaluations that use averaged parameters sometimes produce significantly better results. The authors thus suggest maintaining a shadow parameter $W_{EMA}$ for each parameter $W$, which is used for evaluation: $W_{EMA} = \lambda W_{EMA} + (1 - \lambda) W$

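- DropBlock on FPN – DropBlock is a structured form of dropout that drops contiguous regions of a feature map instead of individual units. The authors apply it only to the FPN, not to the whole YOLOv3 network.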

- IoU Loss – YOLOv4 replaces the L1-loss, which is used in YOLOv3 for bounding box regression with IoU loss. Unlike that approach, authors just add another branch to calculate IoU loss.

- IoU Aware – Since in YOLOv3 detection confidence is calculated by multiplying classification probabilities and objectness score, localization accuracy is not taken into consideration. In order to solve this problem, another IoU branch is added to measure the accuracy of localization.

- Grid Sensitive – This trick is introduced by YOLOv4. Since it is difficult to predict centers of bounding boxes located on the grid boundary, formulas for calculating coordinates of these centers are modified:

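$x = s \cdot (g_x + 1.05 \cdot \sigma(p_x) - (1.05 - 1)/2)$

$y = s \cdot (g_y + 1.05 \cdot \sigma(p_y) - (1.05 - 1)/2)$

Here $\sigma$ is the sigmoid function, $g_x$ and $g_y$ are the integer grid cell indices, $p_x$ and $p_y$ are the raw network outputs, and $s$ is the scale factor.

- Matrix NMS – Matrix NMS is technically Soft-NMS done in parallel. That is why it is faster than traditional NMS.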

- CoordConv – The 1×1 convolution layer in FPN and the first convolution layer in the detection head are replaced with CoordConv layers. These layers allow networks to learn either complete translation invariance or varying degrees of translation dependence. Essentially it adds extra coordinate channels and thus provides convolution access to its own input coordinates.

- SPP – Unlike in YOLOv4, SPP is applied only on the top feature map. In the image above it is marked with a “star”.

- Better Pretrain Model – This is sort of obvious, but if you use a model that was trained better for the backbone the results will be better.
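Two of the tricks in the list above, EMA and Grid Sensitive, boil down to short formulas, so they can be sketched in a few lines of plain Python. This is only an illustration: the function names are hypothetical, parameters are represented as flat lists of floats, and the default decay value is an assumption rather than a recommendation.

```python
import math


def ema_update(shadow, weights, decay=0.9998):
    """One EMA step: W_EMA = decay * W_EMA + (1 - decay) * W, element by element."""
    return [decay * s + (1.0 - decay) * w for s, w in zip(shadow, weights)]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def decode_center(p, g, stride, alpha=1.05):
    """Grid Sensitive decoding: stride * (g + alpha * sigmoid(p) - (alpha - 1) / 2)."""
    return stride * (g + alpha * sigmoid(p) - (alpha - 1.0) / 2.0)


# The shadow weights move only a small step towards the current weights.
print(ema_update([1.0, 1.0], [3.0, -1.0], decay=0.5))

# With alpha > 1 a large raw output can push the centre past the cell boundary,
# which a plain sigmoid can only approach asymptotically.
print(decode_center(p=0.0, g=2, stride=32))
print(decode_center(p=20.0, g=2, stride=32))
```

The design point of Grid Sensitive is visible in the last call: the scaled sigmoid makes the cell border reachable with finite network outputs.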

This approach proves to be really good, since PP-YOLO is faster (FPS) and more accurate (COCO mAP) than EfficientDet and YOLOv4. The results can be seen in the graph above.
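Going back to the IoU Loss and IoU Aware tricks from the list, both build on the intersection over union of a predicted box and a ground-truth box. The sketch below computes it for axis-aligned boxes given as `(x1, y1, x2, y2)`. Using `1 - IoU` as the loss is one common form and is an assumption here, not necessarily the exact formulation used in PP-YOLO.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_x1, inter_y1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    inter_x2, inter_y2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Width and height are clamped at zero so disjoint boxes give no intersection.
    inter = max(0.0, inter_x2 - inter_x1) * max(0.0, inter_y2 - inter_y1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def iou_loss(predicted, target):
    """A simple IoU-based regression loss: 0 for a perfect match, 1 for no overlap."""
    return 1.0 - iou(predicted, target)


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
print(iou_loss((0, 0, 2, 2), (0, 0, 2, 2)))
```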

Learning to Match Distributions for Domain Adaptation

This paper addresses a big problem in our industry. We usually train our predictive models on the training dataset and then test them on the testing dataset. There is always a drop in performance between the training data and the testing data. We accept this as normal because we know that these datasets don’t have the same distribution. The problem is that our approach generally assumes that they do. We try to minimize that gap by getting more data, but that can be expensive.

To ensure consistent performance of predictive models, domain adaptation is performed. The main aim of this process is to match the cross-domain joint distributions. However, this is not always possible, and in the unsupervised setting we don’t have labels for the target domain. Theoretically, matching the joint distributions by matching the marginal and conditional distributions should do the trick, and the solutions presented thus far use this approach. Still, applying it to new applications remains problematic.

The authors of this paper focused on a data-driven solution inspired by deep learning. As you know, the main advantage of deep learning over traditional machine learning is that it can extract features from the data, so the authors apply a similar principle to the automatic distribution matching strategy. Their solution, Learning to Match (L2M), is a framework that is able to combine deep features and human-crafted features. In essence, it has a GAN-like architecture composed of two networks: a meta-network (a feed-forward network used as an approximator) and a feature generator.

The complete methodology of the proposed solution can be broken down into several steps and utilizes architecture from the image above. Architecture is composed of four parts: feature extractor, label classifier, meta-network, and matching feature generator. Feature Extractor is a CNN network that is in charge of extracting features from the input domain. The label classifier minimizes the prediction loss on the labeled source domain. Meta network is the feed-forward neural network used to match cross-domain distributions and learns distribution matching functions. Finally, a feature generator is used to generate useful inputs to the meta-network.

As mentioned, there are two kinds of matching features. The first kind, the task-independent features are general. They can be automatically extracted by the feature extractor. The second kind, Human-designed features are the pre-defined features such as MMD or adversarial game. They are represented with box D in the image above.
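As a small illustration of what a human-designed matching feature such as MMD measures, here is the simplest variant, with a linear kernel, in plain Python. In that case the squared MMD reduces to the squared distance between the mean feature vectors of the two domains. The kernel choice and the function names are assumptions made for this sketch; the paper may well use a different kernel.

```python
def mean_vector(rows):
    """Column-wise mean of a list of equally sized feature vectors."""
    count = len(rows)
    return [sum(column) / count for column in zip(*rows)]


def linear_mmd2(source_features, target_features):
    """Squared MMD with a linear kernel: ||mean(source) - mean(target)||^2."""
    mu_source = mean_vector(source_features)
    mu_target = mean_vector(target_features)
    return sum((s - t) ** 2 for s, t in zip(mu_source, mu_target))


source_features = [[0.0, 0.0], [2.0, 2.0]]   # toy features from the source domain
target_features = [[1.0, 1.0], [3.0, 3.0]]   # toy features from the target domain
print(linear_mmd2(source_features, target_features))   # larger value = bigger domain gap
print(linear_mmd2(source_features, source_features))   # identical samples give zero
```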

When L2M is used in the unsupervised setting, where labels for the target domain are missing, the optimization is performed by generating meta-data in a self-supervised manner. This meta-data is then used to update the distribution matching loss. In each iteration, we randomly sample n instances for each class with high prediction scores. This is done by the feature extractor, and the results are stored as the ground truth of the meta-data. This way the pseudo labels of the meta-data keep getting better, since we utilize the data with the highest prediction probabilities.
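The sampling step can be pictured with a short sketch. It assumes that predictions arrive as `(sample_id, predicted_class, score)` tuples and that "high prediction score" means a score above a fixed threshold; both the data layout and the `min_score` parameter are hypothetical and only serve the illustration.

```python
import random
from collections import defaultdict


def sample_meta_data(predictions, n_per_class, min_score=0.9, rng=None):
    """Pick up to n confident samples per predicted class to serve as pseudo-labeled meta-data."""
    rng = rng or random.Random()
    confident = defaultdict(list)
    for sample_id, predicted_class, score in predictions:
        if score >= min_score:                       # keep only confident predictions
            confident[predicted_class].append(sample_id)
    # Randomly draw n instances per class (or all of them if fewer are available).
    return {cls: rng.sample(ids, min(n_per_class, len(ids)))
            for cls, ids in confident.items()}


predictions = [
    ("a", 0, 0.95), ("b", 0, 0.99), ("c", 0, 0.50),
    ("d", 1, 0.92), ("e", 1, 0.91), ("f", 1, 0.97),
]
meta = sample_meta_data(predictions, n_per_class=2, rng=random.Random(0))
print(meta)
```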

The results are amazing. Experiments on public datasets substantiate the superiority of L2M over state-of-the-art approaches on DA and image generation tasks. The notable result can be seen in the COVID-19 X-ray image adaptation experiment, which is the representation of a highly imbalanced task, where this approach outperforms existing methods.

PyTorch Metric Learning

Many solutions in machine learning require measuring the distance between points. Even highly popular solutions, like recommendation systems or NLP approaches, rely on it. There are a variety of standard approaches that could be used for this purpose, like Euclidean distance, City-Block distance, Cosine similarity, etc. However, designing a specific metric for a specific problem is often a task on its own. In fact, there is a whole sub-field of machine learning dedicated to that, called distance metric learning or simply metric learning. This approach aims to automatically construct a distance metric for specific data using supervised data.
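For reference, the three standard measures mentioned above can be written in a few lines of plain Python. This is just a refresher on the definitions, with hypothetical function names, not code from any particular library.

```python
import math


def euclidean(a, b):
    """Straight-line distance: square root of the summed squared differences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def city_block(a, b):
    """City-Block (Manhattan) distance: sum of absolute differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


print(euclidean([0, 0], [3, 4]))
print(city_block([0, 0], [3, 4]))
print(cosine_similarity([1, 0], [0, 1]))
```

Note that the first two are distances (smaller means more similar), while cosine similarity grows with similarity, which matters when a loss function is switched from one to the other.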

In essence, metric learning measures the similarity among samples while using an optimal distance metric for learning tasks. These methods are, however, limited in solving real-world problems demonstrating non-linear characteristics. In the past couple of years, deep metric learning has emerged and attracted some attention. In general, deep metric learning provides a better solution for nonlinear data through activation functions, and thus it is better for solving non-linear problems. PyTorch Metric Learning is an open-source library that provides various metric learning algorithms, whose implementation would otherwise be time-consuming. This library has 9 modules, displayed in the image below.

These modules can be used separately or in combination for a complete train/test pipeline. Each of these modules has specific functionality. One of the most important ones is the Loss module which models loss functions. These work very much like regular PyTorch loss functions. However, as you can see in the image above its behavior can be modified and augmented with miners, distances, regularizers, and reducers. The whole process can be seen in the image below:

In the first step, miners find the best samples which are used for training. This is an important concept in metric learning. There are two types of miners provided in this library:

- Online miners – find the best tuples within an already sampled batch

- Offline miners – which determine the best way to create batches
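To give a feel for what an online miner does, here is a plain-Python sketch of "batch-hard" style mining: for every anchor in the batch it picks the farthest sample with the same label and the closest sample with a different label. This is an illustration of the idea only, with hypothetical function names; the miners shipped with the library use their own, more elaborate selection rules.

```python
import math


def pairwise_distances(embeddings):
    """Full Euclidean distance matrix for a batch of embeddings."""
    return [[math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b))) for b in embeddings]
            for a in embeddings]


def hardest_triplets(embeddings, labels):
    """Return (anchor, hardest positive, hardest negative) index triplets for the batch."""
    dist = pairwise_distances(embeddings)
    triplets = []
    for anchor, label in enumerate(labels):
        positives = [i for i, l in enumerate(labels) if l == label and i != anchor]
        negatives = [i for i, l in enumerate(labels) if l != label]
        if not positives or not negatives:
            continue  # no valid triplet for this anchor
        hardest_positive = max(positives, key=lambda i: dist[anchor][i])  # farthest same-class
        hardest_negative = min(negatives, key=lambda i: dist[anchor][i])  # closest other-class
        triplets.append((anchor, hardest_positive, hardest_negative))
    return triplets


embeddings = [[0.0], [1.0], [3.0], [1.5], [5.0]]
labels = [0, 0, 0, 1, 1]
triplets = hardest_triplets(embeddings, labels)
print(triplets)
```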

So, miners find the best pairs (since we are working with the 2D distance matrix) in the current batch. The PyTorch Metric Learning library provides an easy way to use them:

from pytorch_metric_learning.losses import CircleLoss
from pytorch_metric_learning.miners import MultiSimilarityMiner

loss_func = CircleLoss()
mining_func = MultiSimilarityMiner()

# dataloader and model are assumed to be defined elsewhere
for data, labels in dataloader:
    embeddings = model(data)
    hard_tuples = mining_func(embeddings, labels)
    loss = loss_func(embeddings, labels, hard_tuples)
    loss.backward()

These pairs are used to index the distance matrix, which is modeled by an instance of a distance class. The distance abstracts different types of distances (duh!). A loss function can be augmented with different distance objects, which will change its behavior. For example, we might want our loss function to use Cosine Similarity instead of Euclidean Distance. Then we can do something like this:

from pytorch_metric_learning.losses import TripletMarginLoss
from pytorch_metric_learning.distances import CosineSimilarity

loss_func = TripletMarginLoss(distance = CosineSimilarity())

In a nutshell, the loss function uses a distance object to compute a pairwise distance matrix and then uses elements of this matrix to compute the loss. Additionally, every loss function has an optional embedding regularizer parameter. Adding embedding or weight regularization terms to the loss is a common thing to do in metric learning. The regularization loss is computed for each embedding in the batch. Here is how you can do it with the PyTorch Metric Learning library:

from pytorch_metric_learning.losses import ContrastiveLoss
from pytorch_metric_learning.regularizers import LpRegularizer

loss_func = ContrastiveLoss(embedding_regularizer = LpRegularizer())

As you are aware, the loss is calculated for pairs or triplets and then reduced to a single value by some operation, such as averaging. Loss objects can receive a reducer parameter which defines how this operation will be performed. For example:

from pytorch_metric_learning.losses import MultiSimilarityLoss
from pytorch_metric_learning.reducers import ThresholdReducer

loss_func = MultiSimilarityLoss(reducer = ThresholdReducer(low = 10, high = 30))
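Conceptually, a threshold-style reducer throws away the per-pair losses that fall outside a range and averages the rest. The following plain-Python sketch mimics that idea. It is an approximation written for illustration, with a hypothetical function name, and it makes no claim about the library's exact boundary handling.

```python
def threshold_reduce(losses, low=None, high=None):
    """Average only the losses that lie strictly between `low` and `high`."""
    kept = [loss for loss in losses
            if (low is None or loss > low) and (high is None or loss < high)]
    # When nothing survives the filter there is nothing to learn from in this batch.
    return sum(kept) / len(kept) if kept else 0.0


per_pair_losses = [5.0, 12.0, 20.0, 40.0]
print(threshold_reduce(per_pair_losses, low=10, high=30))   # averages 12.0 and 20.0
print(threshold_reduce(per_pair_losses))                    # plain mean of everything
```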

Other modules in this library are there to cover some edge cases. For example, some metric learning algorithms are more than just losses or mining functions. Some algorithms require additional networks, data augmentations, learning rate schedules etc. That is what trainers are used for. Together with HookContainer class, you can turn trainers into a complete train/test workflow, with logging and model saving. Here is an example of the complete pipeline that can be used for metric learning on MNIST dataset:

from pytorch_metric_learning import losses, miners, distances, reducers, testers
from pytorch_metric_learning.utils.accuracy_calculator import AccuracyCalculator
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torchvision import datasets, transforms


### MNIST code originally from https://github.com/pytorch/examples/blob/master/mnist/main.py ###
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 32, 3, 1)
        self.conv2 = nn.Conv2d(32, 64, 3, 1)
        self.dropout1 = nn.Dropout2d(0.25)
        self.dropout2 = nn.Dropout2d(0.5)
        self.fc1 = nn.Linear(9216, 128)

    def forward(self, x):
        x = self.conv1(x)
        x = F.relu(x)
        x = self.conv2(x)
        x = F.relu(x)
        x = F.max_pool2d(x, 2)
        x = self.dropout1(x)
        x = torch.flatten(x, 1)
        x = self.fc1(x)
        return x  # 128-dimensional embedding, there is no classification layer


### MNIST code originally from https://github.com/pytorch/examples/blob/master/mnist/main.py ###
def train(model, loss_func, mining_func, device, train_loader, optimizer, epoch):
    model.train()
    for batch_idx, (data, labels) in enumerate(train_loader):
        data, labels = data.to(device), labels.to(device)
        optimizer.zero_grad()
        embeddings = model(data)
        # The miner selects informative triplets, the loss is computed only on those
        indices_tuple = mining_func(embeddings, labels)
        loss = loss_func(embeddings, labels, indices_tuple)
        loss.backward()
        optimizer.step()
        if batch_idx % 20 == 0:
            print("Epoch {} Iteration {}: Loss = {}, Number of mined triplets = {}".format(epoch, batch_idx, loss, mining_func.num_triplets))


### convenient function from pytorch-metric-learning ###
def get_all_embeddings(dataset, model):
    tester = testers.BaseTester()
    return tester.get_all_embeddings(dataset, model)


### compute accuracy using AccuracyCalculator from pytorch-metric-learning ###
def test(dataset, model, accuracy_calculator):
    embeddings, labels = get_all_embeddings(dataset, model)
    print("Computing accuracy")
    # The argument order follows the library version this example was written for;
    # check the signature of get_accuracy in the version you have installed
    accuracies = accuracy_calculator.get_accuracy(embeddings,
                                                  embeddings,
                                                  np.squeeze(labels),
                                                  np.squeeze(labels),
                                                  True)
    print("Test set accuracy (MAP@10) = {}".format(accuracies["mean_average_precision_at_r"]))


# Fall back to the CPU when no GPU is available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.1307,), (0.3081,))
])

batch_size = 256

dataset1 = datasets.MNIST('.', train=True, download=True, transform=transform)
dataset2 = datasets.MNIST('.', train=False, transform=transform)
train_loader = torch.utils.data.DataLoader(dataset1, batch_size=batch_size, shuffle=True)
test_loader = torch.utils.data.DataLoader(dataset2, batch_size=batch_size)

model = Net().to(device)
optimizer = optim.Adam(model.parameters(), lr=0.01)
num_epochs = 1

### pytorch-metric-learning stuff ###
distance = distances.CosineSimilarity()
reducer = reducers.ThresholdReducer(low = 0)
loss_func = losses.TripletMarginLoss(margin = 0.2, distance = distance, reducer = reducer)
mining_func = miners.TripletMarginMiner(margin = 0.2, distance = distance, type_of_triplets = "semihard")
accuracy_calculator = AccuracyCalculator(include = ("mean_average_precision_at_r",), k = 10)
### pytorch-metric-learning stuff ###

for epoch in range(1, num_epochs+1):
    train(model, loss_func, mining_func, device, train_loader, optimizer, epoch)
    test(dataset2, model, accuracy_calculator)

TensorFlow Quantum: A Software Framework for Quantum Machine Learning

Deep Learning has been a hot topic in the past couple of years. Google, with TensorFlow, certainly pushed the boundaries and brought these technologies to the mainstream. During this period another interesting technology had huge growth in both academia and industry: quantum computing. The rapid development of quantum hardware resulted in the equally rapid development of quantum applications. These new ways of processing data will eventually change the way we think about handling information on a computer in general, and will affect each field of computer science. That is how quantum machine learning (QML) algorithms emerged too. They tackle a wide range of applications in both supervised and unsupervised learning. TensorFlow Quantum is a new Google library intended to accelerate the development of quantum machine learning algorithms.

One of the main challenges is that all classical algorithms and data need to be adapted to the new quantum ways, so they can work with quantum processors. That is the case with machine learning algorithms as well. Apart from that, any data emerging from an underlying quantum mechanical process can be considered quantum data. The first generation of QML focused on utilizing quantum processing power to get performance improvements on linear algebra calculations. The main benefit of quantum processors was their ability to perform fast linear algebra on a state space that grows exponentially with the number of qubits. Recently, the second generation of QML emerged. These algorithms are focused on heuristic methods which can be studied empirically thanks to the increased computational capability of quantum hardware. This is similar to how machine learning evolved into deep learning in the first place. These new algorithms use parameterized quantum transformations called parameterized quantum circuits (PQCs) or Quantum Neural Networks (QNNs).

Due to the current state of quantum processors, the authors anticipate that investigations into various possible hybrid quantum-classical machine learning algorithms will be a productive area of research. Technically, this means that quantum computers will be most useful as hardware accelerators, working together with traditional computers. That is where we are at the moment and where TensorFlow Quantum (TFQ), a new quantum framework from Google, is headed. The main goal of this framework is to bridge the quantum computing and machine learning communities. In essence, it is a combination of two libraries: TensorFlow (Google’s ML framework) and Cirq (Google’s quantum framework).

In TFQ, circuits and other quantum computing constructs are represented as tensors. Converting these quantum tensors into classical information is done by ops via simulators or real quantum devices. To be more precise, Cirq objects are converted to TensorFlow string tensors using the tfq.convert_to_tensor method. Here is a simple example of what that looks like:

import cirq
import sympy
import tensorflow as tf
import tensorflow_quantum as tfq

qubit = cirq.GridQubit(0, 0)
theta = sympy.Symbol('theta')
# Parameterized circuit: an X gate raised to the symbolic power theta
c = cirq.Circuit(cirq.X(qubit) ** theta)
c_tensor = tfq.convert_to_tensor([c] * 3)
# One row of symbol values per circuit in the batch (floats, not integers)
theta_values = tf.constant([[0.0], [1.0], [2.0]])
m = cirq.Z(qubit)
# One list of observables per circuit in the batch
paulis = tfq.convert_to_tensor([[m]] * 3)
expectation_op = tfq.get_expectation_op()
output = expectation_op(c_tensor, ['theta'], theta_values, paulis)
abs_output = tf.math.abs(output)

Now, this example is simple and has no QNNs. In general, the steps of building and training QNNs are similar to the steps of building standard neural networks. Here they are:

- Prepare Quantum Dataset – As with regular neural networks, we need to build a dataset first. This is done by creating unparameterized cirq.Circuit objects and then injecting them into a computation graph with tfq.convert_to_tensor.

- Evaluate Quantum Model – In this step, we evaluate how well our quantum model is performing. Its main goal is to perform a quantum computation in order to extract information hidden in a quantum subspace.

- Sample or Average – In this step, we extract classical information in the form of samples from a classical random variable. Quantum state and measured observable impact distribution values of this random variable.

- Evaluate Classical Model – We use deep neural networks to distill correlations between measured expectations.

- Evaluate Cost Function – Cost function is calculated and evaluated based on the results from the previous step.

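- Evaluate Gradients & Update Parameters – After evaluating the cost function, the free parameters of the pipeline are updated in the direction expected to decrease the cost, most commonly via gradient descent.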
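The last three steps of this loop can be sketched without any quantum hardware for the one-qubit circuit used earlier. Applying X raised to the power theta to the |0> state and measuring Z gives the expectation value cos(pi * theta), so the "quantum" part is replaced here by that closed-form expression. The squared-error cost, the learning rate, the starting point and all function names are assumptions chosen for this toy; the gradient of the expectation is obtained with the parameter-shift rule.

```python
import math


def expectation_z(theta):
    """<Z> after applying X**theta to |0>, evaluated analytically as cos(pi * theta)."""
    return math.cos(math.pi * theta)


def cost(theta, target):
    """Squared error between the measured expectation and the desired label."""
    return (expectation_z(theta) - target) ** 2


def cost_gradient(theta, target):
    # Parameter-shift rule: the derivative of the expectation is obtained from two
    # extra circuit evaluations at shifted parameter values (here theta +/- 0.5).
    d_expectation = math.pi * (expectation_z(theta + 0.5) - expectation_z(theta - 0.5)) / 2.0
    # Chain rule through the squared error.
    return 2.0 * (expectation_z(theta) - target) * d_expectation


def train(theta=0.3, target=-1.0, learning_rate=0.05, steps=300):
    """Plain gradient descent on the single circuit parameter."""
    for _ in range(steps):
        theta -= learning_rate * cost_gradient(theta, target)
    return theta


theta = train()
print(theta, expectation_z(theta), cost(theta, -1.0))
```

In a real TFQ model the call to `expectation_z` would be replaced by an expectation op running on a simulator or a quantum device, while the cost and the parameter update stay on the classical side.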

To find out how to create a minimal example of a hybrid quantum-classical model – binary classification, you can check this Jupyter notebook. Eventually, TensorFlow Quantum fits quite nicely into the current TensorFlow Ecosystem.

The Microsoft Toolkit of Multi-Task Deep Neural Networks for Natural Language Understanding

As we are aware, the world of deep learning is dominated by supervised learning. However, this situation is slowly changing. A lot of breakthroughs in the field resulted in models that are huge and hard to train. This is why transfer learning emerged. Today engineers use models that someone else pre-trained and then customize them for a specific task. In Natural Language Understanding (NLU) this paradigm shift is most noticeable, and the development of these projects differs a lot from the rest of the field. The process of building NLU models is based on the pre-training stage, which trains large neural networks like Transformers in self-supervision mode on a large unlabeled text corpus. The pre-training stage is followed by the fine-tuning stage, where the pre-trained model is adapted to the specific task.

Since these models are huge, they are quite tricky to handle when it comes to deployment and performance. That is why the final stage, knowledge distillation, is of the utmost importance. If done properly, this stage can compress a large model and ease our deployment process. There are many tools out there that cover some parts of this process. However, none of these tools cover all the necessary steps. Often they do not provide adversarial training, which is key when it comes to NLU fine-tuning tasks. This paper presents MT-DNN, a new open-source PyTorch-based toolkit from Microsoft. It is available here.

To install it, you need to have Python 3.6 and PyTorch installed on your local environment. Then you need to install requirements with the command:

pip install -r requirements.txt

After the requirements are installed, pull the Docker container with the command:

docker pull allenlao/pytorch-mt-dnn:v0.5

Finally, run the Docker container with:

docker run -it --rm --runtime nvidia allenlao/pytorch-mt-dnn:v0.5 bash

You can try it by training a toy MT-DNN model. First, download the data using the command:

sh download.sh

Pre-processing is done with the command:

sh experiments/glue/prepro.sh

Finally, run the training with:

python train.py

One of the key advantages of this tool is out-of-the-box adversarial training, multi-task learning and knowledge distillation. Users can also perform pre-training from scratch. Apart from that, this tool provides a number of pre-trained NLU models, like BERT, RoBERTa and UniLM. The workflow for creating models using MT-DNN goes as follows:

- Train a neural language model on a large amount of unlabeled raw text to obtain general contextual representations.

- Fine-tune the learned contextual representation on downstream tasks, e.g. GLUE (Wang et al., 2018).

- Distill this large model to a lighter one for online deployment.

In the latter two phases, we can leverage multi-task learning and adversarial training to further improve performance.

Adversarial training is available in the fine-tuning and knowledge distillation stages. The process of knowledge distillation is very interesting. It all starts with selecting a set of task-specific labeled training data. After that, an ensemble of neural nets, the so-called teacher, is trained for each task. The teacher is used to generate a set of soft targets for each task-specific training sample. A single MT-DNN, the so-called student, is trained using multi-task learning and backpropagation on these soft targets. The overall system architecture is based on Lexicon Encoders. Here is what it looks like:

An embedding is applied to the input, which can be a sentence or a set of sentences. As a result, this input is represented as an embedding vector. Then the encoder (Transformer or LSTM) captures contextual information for each word and generates contextual embedding vectors. In the end, an additional task-specific layer is applied for each task, along with the operation for classification, similarity scoring or relevance ranking.
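Returning to the knowledge distillation step described earlier, the role of soft targets can be shown with a small plain-Python sketch. It assumes that soft targets are obtained by averaging the class probabilities of the teachers in the ensemble and that the student is trained with a cross-entropy loss against them. The function names and the toy logits are made up for the illustration and are not part of the MT-DNN toolkit.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    top = max(logits)
    exps = [math.exp(value - top) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def soft_targets(teacher_logits):
    """Average the class probabilities predicted by every teacher in the ensemble."""
    probabilities = [softmax(logits) for logits in teacher_logits]
    return [sum(column) / len(probabilities) for column in zip(*probabilities)]


def distillation_loss(student_logits, targets):
    """Cross-entropy between the soft targets and the student's predicted distribution."""
    student_probabilities = softmax(student_logits)
    return -sum(t * math.log(p) for t, p in zip(targets, student_probabilities))


# Two teachers that disagree produce a soft, uncertain target for this sample.
targets = soft_targets([[2.0, 0.0], [0.0, 2.0]])
print(targets)
print(distillation_loss([0.0, 0.0], targets))  # student that mirrors the uncertainty
print(distillation_loss([5.0, 0.0], targets))  # over-confident student is penalized more
```

Unlike hard labels, the soft targets carry the ensemble's uncertainty, which is the extra signal the student learns from.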

Conclusion

In this article, we had a chance to read about 15 really cool papers that, as we see it, are going to change the way we do our jobs. Did you have any favorites this year? Let us know.

Thank you for reading!

Rubik's Code

Building Smart Apps
